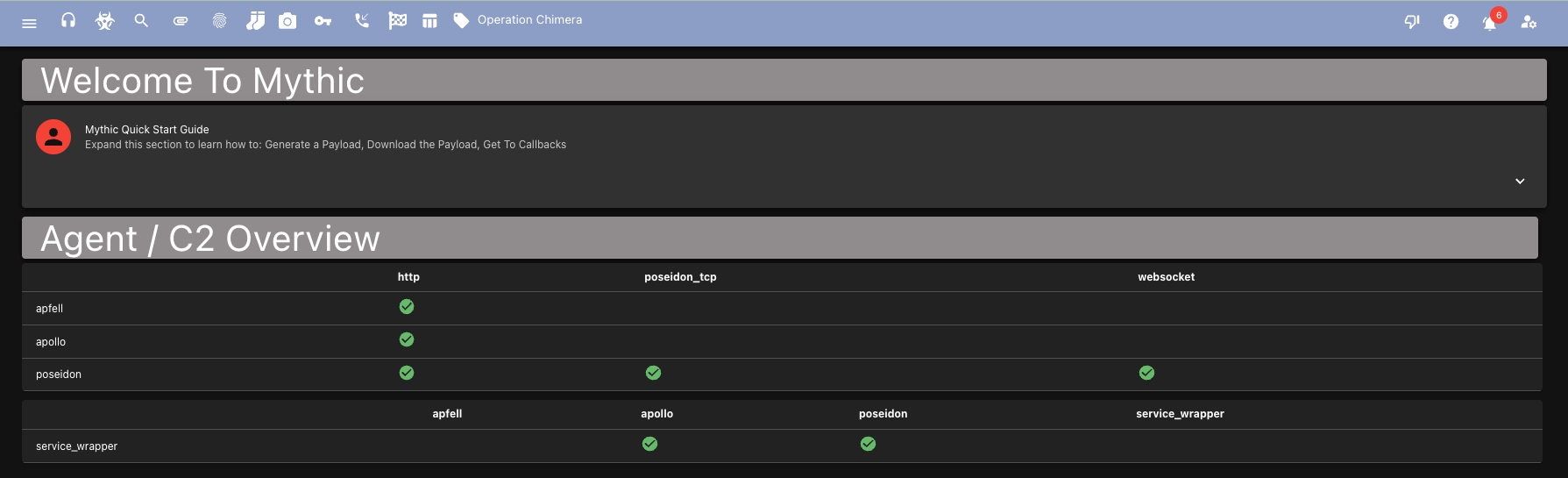

A cross-platform, post-exploit, red teaming framework designed to provide a collaborative and user-friendly interface for operators.

Mythic is a multiplayer, command and control platform for red teaming operations. It is designed to facilitate a plug-n-play architecture where new agents, communication channels, and modifications can happen on the fly. Some of the Mythic project's main goals are to provide quality of life improvements to operators, improve maintainability of agents, enable customizations, and provide more robust data analytic capabilities to operations.

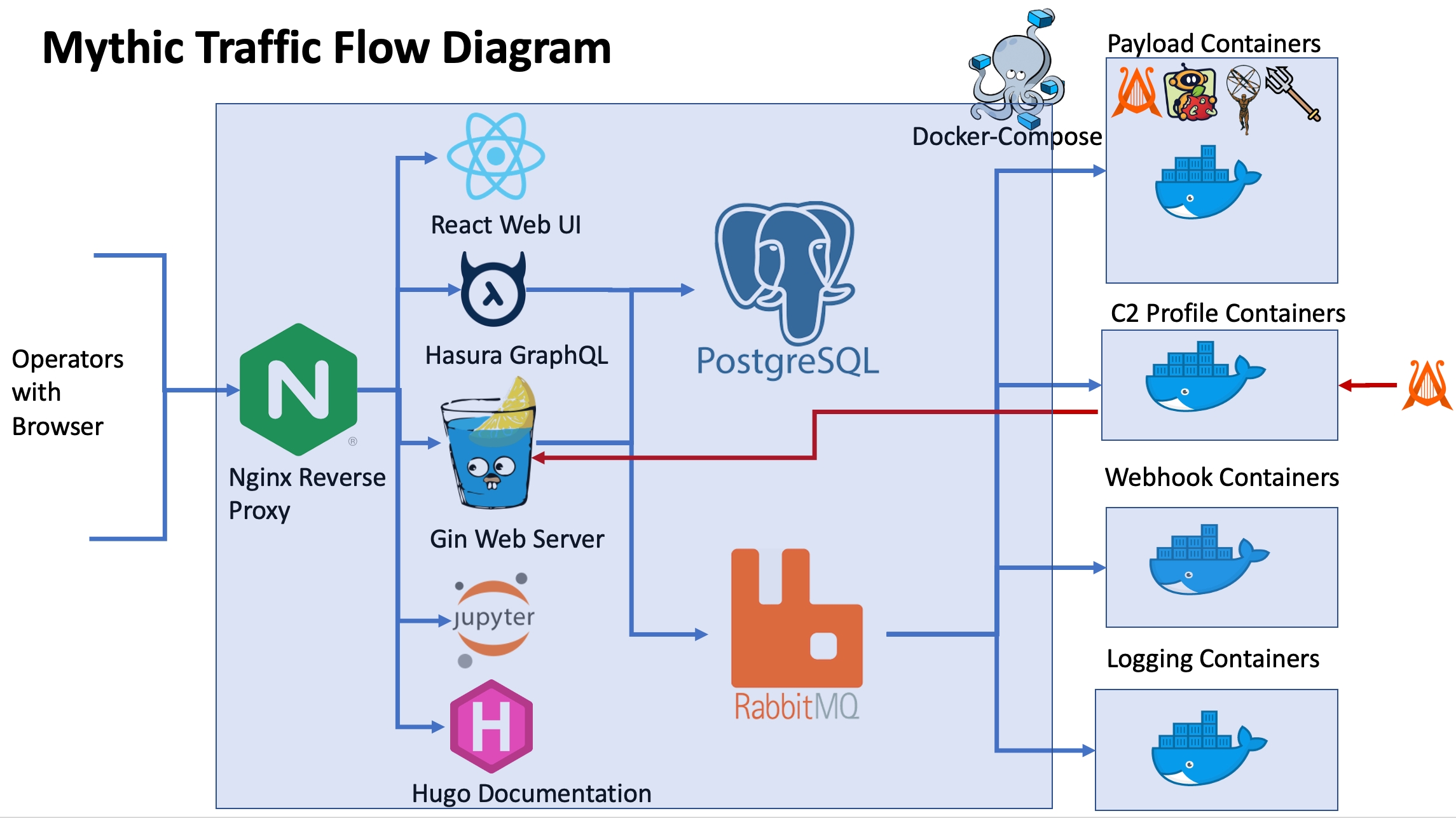

Fundamentally, Mythic uses a web-based front end (React) and Docker containers for the back end. A GoLang server handles the bulk of the web requests via GraphQL APIs and WebSockets. This server then handles connections to the PostgreSQL database and communicates with the other Docker containers via RabbitMQ. This enables the individual components to be on separate physical computers or in different virtual machines if desired.

A helpful view of the current state of the C2 Profiles and Agents for Mythic can be found here:

A reverse Nginx proxy provides a single port to connect through to reach back-end services. Through this reverse proxy, operators can connect to:

React UI

Hugo documentation container (documentation on a per-agent and per-c2 profile basis)

Hasura GraphQL console (test GraphQL queries, explore/modify the database)

Jupyter Notebook (with Mythic Scripting pre-installed and pre-created examples)

Data modeling, tracking, and analysis are core aspects of Mythic's ability to provide quality of life improvements to operators. From the very beginning of creating a payload, Mythic tracks the specific command and control profile parameters used, the commands loaded into the payload and their versions, who created it, when, and why. All of this is used to provide a more coherent operational view when a new callback checks in. Simple questions such as "which payload triggered this callback", "who issued this task", and even "why is there a new callback" now all have contextual data to give answers. From here, operators can start automatically tracking their footprints in the network for operational security (OpSec) concerns and to help with deconfliction. All agents and commands can track process creates, file writes, API calls, network connections, and more. These artifacts can be automatically recorded when the task is issued or even reported back by agents as they happen.

Mythic also incorporates MITRE ATT&CK mappings into the standard workflow. All commands can be tagged with ATT&CK techniques, which will propagate to the corresponding tasks issued as well. In a MITRE ATT&CK Matrix view (and ATT&CK Navigator view), operators can view coverage of possible commands as well as coverage of commands issued in an operation. Operators can also provide comments on each task as they operate to help make notes about why a task was important. Additionally, to make deconfliction and reporting easier, all commands, output, and comments can be globally searched through the main web interface.

Check out the code on GitHub: https://github.com/its-a-feature/Mythic

Join the #Mythic channel in the BloodHound public slack

Reach out on Twitter: @its_a_feature_

By default, the server binds to 0.0.0.0 on port 7443 with a self-signed certificate (unless otherwise configured). The address 0.0.0.0 is an alias meaning that the server listens on all IPv4 addresses on the machine. Browse to https://127.0.0.1:7443 if you're on the same machine that's running the server, or to any of that machine's IPv4 addresses otherwise.

Browse to the server with any modern web browser. You will be automatically redirected to the /login URL. This URL is protected by allowed_ip_blocks.

The default username is mythic_admin and the default password is randomized. The password is stored in Mythic/.env after first launch, but you can also view it with sudo ./mythic-cli config get MYTHIC_ADMIN_PASSWORD. You can set the password yourself by setting that environment variable before starting Mythic for the first time, or change it later through the UI.

Mythic uses JSON Web Tokens (JWT) for authentication. When you use the browser (vs the API on the command line), Mythic stores your access and refresh tokens in a cookie as well as in the local session storage. This should be seamless as long as you leave the server running; however, the history of the refresh tokens is saved in memory. So, if you authenticate in the browser, then restart the server, you’ll have to sign in again.
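For readers unfamiliar with JWTs, the following is a minimal sketch of how the payload of such a token can be inspected with the Python standard library. The claim names (`sub`, `exp`) and the unsigned sample token are illustrative assumptions, not the claims Mythic actually issues, and the sketch deliberately does not verify any signature.

```python
import base64
import json


def b64url_encode(raw: bytes) -> str:
    """Base64url-encode without padding, the way JWT segments are written."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    """Restore the stripped padding, then base64url-decode."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_unsigned_sample(claims: dict) -> str:
    """Build a demo token with an empty signature (illustration only)."""
    header = b64url_encode(json.dumps({"alg": "none", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    return f"{header}.{payload}."


def decode_jwt_payload(token: str) -> dict:
    """Return the claims of a JWT WITHOUT verifying its signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


# Hypothetical claims; a real access token will carry different fields.
sample = make_unsigned_sample({"sub": "mythic_admin", "exp": 1700000000})
claims = decode_jwt_payload(sample)
```

Decoding like this is only useful for inspection; trusting a token requires verifying its signature on the server side.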

If you're using Chrome and the self-signed certificate that Mythic generates by default, you will probably see a warning like this when you try to connect:

This is fine and expected since we're not using a Let's Encrypt or other proper domain certificate. To get around this, simply click somewhere within the window and type thisisunsafe. Your browser will now temporarily accept the cert and allow you through.

At some point in the future, your browser will decide to remind you that you're using a self-signed certificate. Mythic cannot actually read this error message due to Chrome's security policies. When this happens, simply refresh your page. You'll be brought back to the same big warning page as the image above and you can type thisisunsafe again to continue your operations.

Mythic is meant to be used by multiple operators working together to accomplish operations. That typically means there's a lead operator, multiple other operators, and potentially people that are just spectating. Let's see how operators come into play throughout Mythic.

Every user has their own password for authenticating to Mythic. On initial startup, one account is created with a password specified via the MYTHIC_ADMIN_PASSWORD environment variable or by creating a Mythic/.env file with a MYTHIC_ADMIN_PASSWORD=passwordhere entry. This account can then be used to provision other accounts (or they can be created via the scripting ability). If the admin password isn't specified via the environment variable or via the Mythic/.env file, then a random password is used. This password is only used on initial setup to create the first user; after that, this value is no longer used.

Every user's password must be at least 12 characters long. If somebody tries to log in with an unknown account, all operations will get a notification about it. Similarly, if a user fails to log into their account 10 times in a row, the account will lock. The only account that will not lock out is the initial account that's created. Instead, that account will throttle authentication attempts to one per minute.
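The rules above can be sketched as a small Python model. This is not Mythic's implementation: the `Account` class, the return strings, and the choice to start throttling the initial account only once it has reached 10 consecutive failures are all assumptions made for illustration.

```python
from dataclasses import dataclass

MIN_PASSWORD_LENGTH = 12     # minimum length stated above
MAX_FAILED_LOGINS = 10       # consecutive failures before a lock
ADMIN_THROTTLE_SECONDS = 60  # initial account: one attempt per minute


@dataclass
class Account:
    """Hypothetical operator record, not Mythic's database schema."""
    username: str
    is_initial_admin: bool = False
    failed_logins: int = 0
    locked: bool = False
    last_attempt: float = 0.0


def password_is_acceptable(password: str) -> bool:
    return len(password) >= MIN_PASSWORD_LENGTH


def record_login_attempt(account: Account, success: bool, now: float) -> str:
    """Apply the rules and return 'ok', 'denied', 'locked' or 'throttled'."""
    if account.locked:
        return "locked"
    over_limit = account.failed_logins >= MAX_FAILED_LOGINS
    if account.is_initial_admin and over_limit:
        # Assumption: the initial account is throttled instead of locked.
        if now - account.last_attempt < ADMIN_THROTTLE_SECONDS:
            return "throttled"
    account.last_attempt = now
    if success:
        account.failed_logins = 0
        return "ok"
    account.failed_logins += 1
    if account.failed_logins >= MAX_FAILED_LOGINS and not account.is_initial_admin:
        account.locked = True
        return "locked"
    return "denied"
```

A regular account ends up `locked` on its tenth consecutive failure, while the initial account keeps accepting attempts, just no more often than once per minute.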

There are a few different kinds of operator permissions throughout Mythic.

Admin - This is a global setting that grants users the ability to see all operations, unlock all callbacks, and interact with everything in Mythic. The only account that has this initially is the first account created and only Admin accounts can grant other admin accounts this level of permission. Similarly, only admin accounts can remove admin permissions from other admin accounts.

Operation Admin - This is the lead of a specific operation. The operation admin can unlock anybody else's callback, can bypass any opsec check, and has full rights over that operation.

Operator - These are the normal permissions for a user. Operators can be added to operations by Admins or by the Operation Admin, and within an operation, they can bypass opsec checks for operators and only unlock the callbacks they locked.

Spectator - This permission level cannot make any modifications within Mythic for their operation. Spectators can still query and see all tasks/responses/artifacts/etc within an operation, but they cannot issue tasks, lock callbacks, create payloads, etc.
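As a rough illustration of how these four levels differ, the callback-unlock and modification rules described above could be modelled as follows. The `Role` enum and function names are hypothetical and exist only for this sketch.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"
    OPERATION_ADMIN = "operation_admin"
    OPERATOR = "operator"
    SPECTATOR = "spectator"


def can_modify(role: Role) -> bool:
    """Spectators can read everything in their operation but change nothing."""
    return role is not Role.SPECTATOR


def can_unlock_callback(role: Role, requester: str, locked_by: str) -> bool:
    """Admins and operation admins unlock anything; operators only their own locks."""
    if role in (Role.ADMIN, Role.OPERATION_ADMIN):
        return True
    if role is Role.OPERATOR:
        return requester == locked_by
    return False
```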

You might run into the situation where you need to add people to your server, but don't want to pre-create accounts and passwords for each person (and hope they change their password). Instead, you can generate an invite link for each operator. The creation and use of each one is tracked within Mythic's operational event log in the UI, so you know exactly who created each link and which new user was created as a result.

Invite links are disabled by default, but an admin can enable them via the .env config (MYTHIC_SERVER_ALLOW_INVITE_LINKS) or via the global settings in the UI. Each link can be used only once, and you can track unused links in the UI as well. This information isn't stored in the database, so these invite links are deleted/unusable after a server restart.

From the operator settings page, there's an option to view invite links that have been generated but not used. These can be deleted so that they can't be used at all in case you want to revoke an invite link that was sent out.
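The lifecycle described above (single use, revocable, held only in memory) can be sketched with a plain dictionary. The `InviteLinks` class and its method names are assumptions for illustration and say nothing about how Mythic stores or formats its links.

```python
import secrets
from typing import Dict, List, Optional


class InviteLinks:
    """Hypothetical in-memory registry: everything is lost when the process restarts."""

    def __init__(self) -> None:
        self._pending: Dict[str, str] = {}  # invite code -> operator who created it

    def create(self, created_by: str) -> str:
        code = secrets.token_urlsafe(16)
        self._pending[code] = created_by
        return code

    def redeem(self, code: str) -> Optional[str]:
        """Consume a code; return its creator, or None if unknown or already used."""
        return self._pending.pop(code, None)

    def revoke(self, code: str) -> bool:
        """Delete an unused code so it can no longer be redeemed."""
        return self._pending.pop(code, None) is not None

    def unused(self) -> List[str]:
        return sorted(self._pending)
```

Because `redeem` removes the code from the dictionary, a second attempt with the same code fails, which mirrors the one-time-use behavior.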

Bot accounts are unique accounts that cannot log in directly to Mythic, but can have APITokens and perform actions. Bot accounts are automatically created for each operation when a new operation is created, but can also be created by admin accounts.

Bot accounts can be assigned to operations and given different roles/block lists just like other operators in an operation.

Mythic uses docker containers to logically separate different components and functions. There are two main categories:

Mythic's main core. This consists of docker containers stood up with docker-compose:

mythic_server - A GoLang gin webserver instance

mythic_postgres - An instance of a postgresql database

mythic_rabbitmq - An instance of a rabbitmq container for message passing between containers

mythic_nginx - An instance of a reverse Nginx proxy

mythic_graphql - An instance of a Hasura GraphQL server

mythic_jupyter - An instance of a Jupyter notebook

mythic_documentation - An instance of a Hugo webserver for localized documentation

Installed Services

Any folder in Mythic/InstalledServices will be treated like a docker container (payload types, c2 profiles, webhooks, loggers, translation containers, etc)

To stop a specific container, run sudo ./mythic-cli stop {container name}.

If you want to reset all of the data in the database, use sudo ./mythic-cli database reset.

If you want to start/restart any specific payload type container, you can run sudo ./mythic-cli start {payload_type_name} and just that container will start/restart. To start multiple containers, separate their names with spaces: sudo ./mythic-cli start {container 1} {container 2}.

All of Mythic's containers share a single docker-compose file. When you install an agent or C2 profile, this docker-compose file will automatically be updated. However, you can always add to or remove from this file via mythic-cli and list out what's registered in the docker-compose file versus what you have available on your system:

./mythic-cli add apfell

[+] Successfully updated docker-compose.yml

./mythic-cli remove http

[+] Successfully updated docker-compose.yml

This makes it easy to track what's available to you and what you're currently using.

Operators connect via a browser to the main Mythic server, a GoLang gin web server. This main Mythic server connects to a PostgreSQL database where information about the operations lives. Each of these is in its own docker container. When Mythic needs to talk to any payload type container or c2 profile container, it does so via RabbitMQ, which is in its own docker container as well.

When an agent calls back, it connects through these c2 profile containers, which have the job of transforming whatever the c2 profile specific language/style is back into the normal RESTful API calls that the Mythic server needs.

How to install Mythic and agents in an offline environment

1. Install Mythic following the normal installation.

2. With Mythic running, install any other agents or profiles you might need/want.

sudo ./mythic-cli install github https://github.com/MythicAgents/Apollo

3. Export your docker containers. Make sure you also save the tags.

docker save $(docker images -q) -o mythic_images.tar

docker images | sed '1d' | awk '{print $1 " " $2 " " $3}' > mythic_tags

4. Download donut from PyPI (this is Apollo-specific, so there might be others depending on your agent).

mkdir Payload_Types/apollo/depends

pip3 download donut -d Payload_Types/apollo/depends

Download Apollo dependencies (Apollo installs these dynamically within the Docker container at build time, so pre-fetch them).

wget https://www.nuget.org/api/v2/package/Fody/2.0.0 -O Payload_Types/apollo/depends/fody.2.0.0.nupkg

wget https://www.nuget.org/api/v2/package/Costura.Fody/1.6.2 -O Payload_Types/apollo/depends/costura.fody.1.6.2.nupkg

5. Tar the Mythic directory.

tar cfz mythic.tar.gz /Mythic

6. Push mythic_images.tar, mythic_tags, and mythic.tar.gz to your offline box.

7. Import docker images and restore tags.

docker load -i mythic_images.tar

while read REPOSITORY TAG IMAGE_ID; do echo "== Tagging $REPOSITORY $TAG $IMAGE_ID =="; docker tag "$IMAGE_ID" "$REPOSITORY:$TAG"; done < mythic_tags

8. Extract the Mythic directory.

tar xfz mythic.tar.gz

cd Mythic

9. Update Apollo's Dockerfile (at the time of use, the version might not be 0.1.1 anymore; check Container Syncing for the latest). This is Apollo-specific, so you might need to copy in pieces for other agents/c2 profiles depending on what components they dynamically try to install.

from itsafeaturemythic/csharp_payload:0.1.1

COPY ["depends/donut-0.2.2.tar.gz", "donut-0.2.2.tar.gz"]

COPY ["depends/costura.fody.1.6.2.nupkg", "costura.fody.1.6.2.nupkg"]

COPY ["depends/fody.2.0.0.nupkg", "fody.2.0.0.nupkg"]

RUN /usr/local/bin/python3.8 -m pip install /donut-0.2.2.tar.gz

RUN mkdir /mythic_nuget

RUN nuget sources add -name mythic_nuget -source /mythic_nuget

RUN nuget sources disable -name nuget.org

RUN nuget add /fody.2.0.0.nupkg -source /mythic_nuget

RUN nuget add /costura.fody.1.6.2.nupkg -source /mythic_nuget

10. Start Mythic

sudo ./mythic-cli start

Comments are a single text description that can be added to any task, file, credential, etc in an operation. All members of the operation can see and modify the comment, but the last person that adds or modifies it will show up as the one that added it.

Comments can be found in many places throughout Mythic. On almost any page where you see a task and output, you'll be able to see task comments. These comments can be added by selecting the dropdown for the task status and selecting comment. When there is a comment, you can click the chat bubble icon to show/hide them.

Comments can be removed by either clicking the red trash icon or editing the comment to be a blank string "".

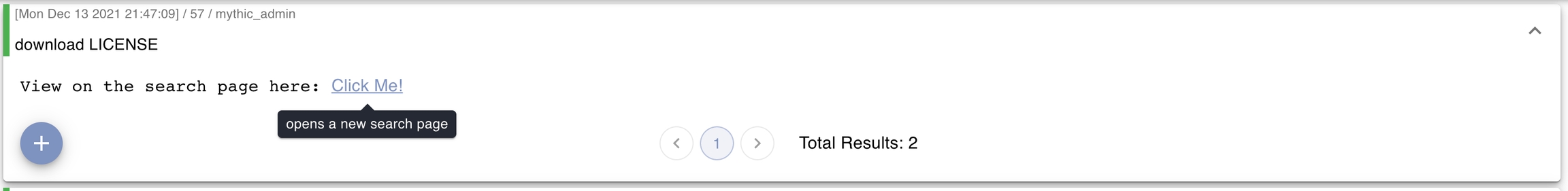

Comments are a nice way to highlight certain tasks and output as important for later use, but just like everything else, they can easily get lost in an operation. When searching across any primary object on the search page (tasks, files, credentials, etc), you can opt to search by comment as well.

Commands keep track of a wealth of information such as name, description, help information, if it needs admin permissions, the current version, any parameters, artifacts, MITRE ATT&CK mappings, which payload type the command corresponds to, who created or last edited the command, and when. That is a lot of information, so let's break that down a bit.

Every command is different – some take no parameters, some take arrays, strings, integers, or a number of other things. To help accommodate this, you can add parameters to commands so that operators know what they need to be providing. You must give each parameter a name and they must be unique within that command. You can also indicate if the parameter is required or not.

Parameters can be in conditional parameter "groups" - this allows you to say things like parameter X and parameter Y are mutually exclusive, but you should always supply parameter W. As an operator, if there are any parameter groups for a command and you don't provide enough parameters to determine which group to use, Mythic will throw a warning and ask you to use shift + enter to force the modal popup. From here, there's a dropdown at the top to change the group you're looking at to see which parameters to enter.

If a command takes named parameters, but none are supplied on the command line, a GUI modal will pop up to assist the operator.

There is no absolute requirement that the input parameters be in JSON format; it's just recommended.
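To make the parameter-group behavior described above more concrete, here is a minimal sketch of how the supplied parameter names could be used to decide which group applies. The group names (`by_x`, `by_y`) and the matching rule (a group is a candidate when every supplied parameter belongs to it) are assumptions for illustration, not Mythic's actual resolution logic.

```python
from typing import Dict, List, Optional, Set


def matching_groups(groups: Dict[str, Set[str]], supplied: Set[str]) -> List[str]:
    """Return the groups whose parameter set contains everything that was supplied."""
    return sorted(name for name, params in groups.items() if supplied <= params)


def resolve_group(groups: Dict[str, Set[str]], supplied: Set[str]) -> Optional[str]:
    """Return the single matching group, or None when the input is ambiguous or invalid."""
    candidates = matching_groups(groups, supplied)
    return candidates[0] if len(candidates) == 1 else None


# W is always supplied; X and Y are mutually exclusive.
groups = {"by_x": {"W", "X"}, "by_y": {"W", "Y"}}
```

With these groups, supplying only W matches both groups and resolves to `None`, which corresponds to the situation where the operator is asked to open the modal and pick a group.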

Screenshots for an entire operation can be accessed via the camera icon in the top bar or the search page.

The screenshots display as they're coming in and will indicate how many chunks are left before you have the full image. At any point you can click on the image and view what's available so far.

History of MythicTips

This page describes how messages flow within Mythic

The following subpages have Mermaid sequence diagrams explaining how messages flow amongst the various microservices for Mythic when doing things like creating payloads, issuing tasks, and transferring files.

There are a lot of moving pieces within Mythic and its agents, so it's helpful to take a step back and see how messages are flowing between the different components.

Here we can see an operator issue tasking to the Mythic server. The Mythic server registers the task as "preprocessing" and informs the operator that it got the task. Mythic then sends the task off to the corresponding Payload Type container for processing. The container looks up the corresponding command python file, parses the arguments, validates the arguments, and passes the resulting parameters to the create_tasking function. This function can leverage a bunch of RPC functionality going back to Mythic to register files, send output, etc. When it's done, it sends the final parameters back to Mythic which updates the Task to either Submitted or Error. Now that the task is out of the preprocessing state, when an agent checks in, it can receive the task.
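The status changes in this flow can be sketched as a tiny state machine. The lowercase status strings and function names are choices made for this sketch; only the preprocessing, submitted, and error states named above are modelled.

```python
from typing import Dict, Set

# Allowed transitions taken from the flow described above.
TRANSITIONS: Dict[str, Set[str]] = {
    "preprocessing": {"submitted", "error"},
}


def advance(current: str, new: str) -> str:
    """Move a task to a new status, rejecting transitions the flow does not allow."""
    if new not in TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot go from {current!r} to {new!r}")
    return new


def agent_can_receive(status: str) -> bool:
    """An agent only picks up tasks that have left preprocessing successfully."""
    return status == "submitted"


status = "preprocessing"               # Mythic registers the new task
ready_before = agent_can_receive(status)
status = advance(status, "submitted")  # the container finished create_tasking
ready_after = agent_can_receive(status)
```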

What happens when you want to transfer a file from Mythic -> Agent? There are two different options: tracking a file via a UUID and pulling down chunks or just sending the file as part of your tasking.

This is an example of an operator uploading a file. The file is processed in the Payload Type's create_tasking function, which tracks and registers it within Mythic. Now the tasking has a UUID for the file rather than the file contents itself. This allows Mythic and the Agent to uniquely reference a file. The agent gets tasking, sees the file id, and submits more requests to fetch the file. Upon finally getting the full file, it resolves the relative upload path into an absolute path and sends an update back to Mythic to let it know that the file the operator said to upload to ./test is actually at /abs/path/to/test on the target host.

Conversely, you can opt to not track the file (or track the file within Mythic, but not send the UUID down to the agent). In this case, you can't easily reference the same instance of the file between the Agent and Mythic:

You're able to upload and transfer the file just fine, but when it comes to reporting back information on it, Mythic and the Agent can't agree on the same file, so it doesn't get updated. You might be thinking that this is silly, of course the two know what the file is, it was just uploaded. Consider the case of files being deleted or multiple instances of a file being uploaded.
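The tracked variant can be sketched in a few lines of Python. The `FileTracker` class, the 1-based chunk numbering, and the tiny chunk size are assumptions for illustration and do not reflect Mythic's real message format; the point is only that the UUID gives both sides a shared handle for the file.

```python
import posixpath
import uuid
from typing import Dict


class FileTracker:
    """Hypothetical server-side registry: file UUID -> contents and reported path."""

    def __init__(self, chunk_size: int) -> None:
        self.chunk_size = chunk_size
        self.files: Dict[str, bytes] = {}
        self.full_paths: Dict[str, str] = {}

    def register(self, data: bytes) -> str:
        file_id = str(uuid.uuid4())
        self.files[file_id] = data
        return file_id

    def total_chunks(self, file_id: str) -> int:
        return -(-len(self.files[file_id]) // self.chunk_size)  # ceiling division

    def get_chunk(self, file_id: str, chunk_num: int) -> bytes:
        start = (chunk_num - 1) * self.chunk_size  # chunks are numbered from 1 here
        return self.files[file_id][start:start + self.chunk_size]

    def report_full_path(self, file_id: str, full_path: str) -> None:
        self.full_paths[file_id] = full_path


def agent_upload(tracker: FileTracker, file_id: str, remote_path: str, cwd: str) -> bytes:
    """Fetch every chunk by UUID, then report the resolved absolute path back."""
    count = tracker.total_chunks(file_id)
    chunks = [tracker.get_chunk(file_id, n) for n in range(1, count + 1)]
    full_path = posixpath.normpath(posixpath.join(cwd, remote_path))
    tracker.report_full_path(file_id, full_path)
    return b"".join(chunks)


tracker = FileTracker(chunk_size=4)
file_id = tracker.register(b"hello world")
received = agent_upload(tracker, file_id, "./test", "/abs/path/to")
```

Without the UUID, the final `report_full_path` call would have no key to attach the path to, which is the mismatch described in the untracked case.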

When your container starts up, it connects to the RabbitMQ broker system. Mythic then tries to look up the associated payload type and, if it can find it, will update the running status. However, if Mythic cannot find the payload type, then it'll issue a "sync" message to the container. Similarly, when a container starts up, the first thing it does upon successfully connecting to the RabbitMQ broker system is to send its own synced data.

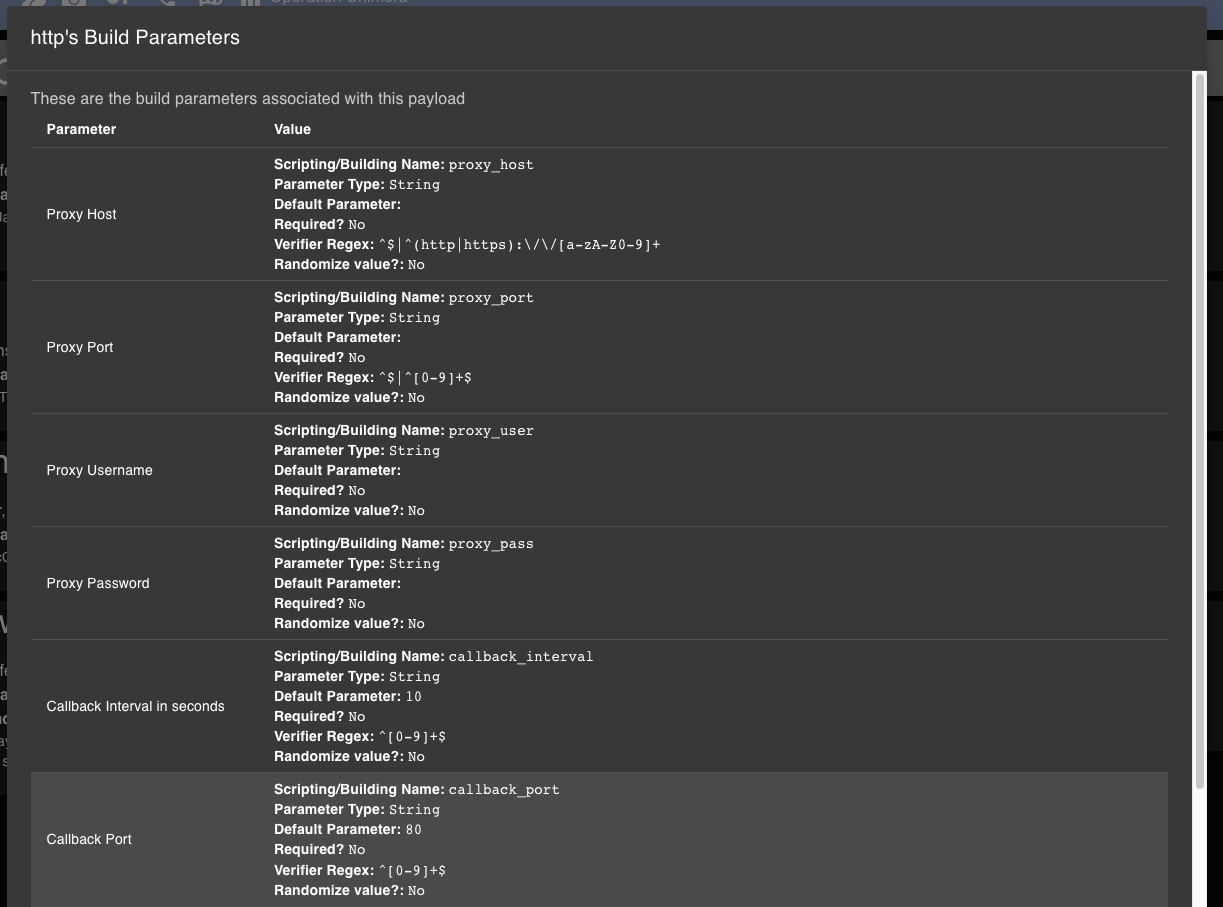

This data is simply a JSON representation of everything about your payload - information about the payload type, all the commands, build parameters, command parameters, browser scripts, etc.

Syncing happens at a few different times and there are some situations that can cause cascading syncing messages.

When a payload container starts, it sends all of its synced data down to Mythic

If a C2 profile syncs, it'll trigger a re-sync of all Payload Type containers. This is because a payload type container might say it supports a specific C2, but that c2 might not be configured to run or might not have checked in yet. So, when it does, this re-sync of all the payload type containers helps make sure that every agent that supports the C2 profile is properly registered.

When a Wrapper Payload Type container syncs, it triggers a re-sync of all non-wrapper payload types. This is because a payload type might support a wrapper that doesn't exist yet in Mythic (configured to not start, hasn't checked in yet, etc). So, when that type does check in, we want to make sure all of the wrapper payload types are aware and can update as necessary.
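The cascading rules above can be summarized in a short sketch. The container kinds (`c2_profile`, `payload_type`, `wrapper`) and the name `service_wrapper` are labels invented for this example, and treating wrappers as payload types for the C2 re-sync is an assumption.

```python
from typing import Dict, List


def resync_targets(synced: str, containers: Dict[str, str]) -> List[str]:
    """Return the containers that should re-sync after `synced` has synced."""
    kind = containers[synced]
    if kind == "c2_profile":
        # Assumption: wrappers count as payload types for this rule.
        wanted = {"payload_type", "wrapper"}
    elif kind == "wrapper":
        wanted = {"payload_type"}  # only non-wrapper payload types
    else:
        wanted = set()             # a regular payload type triggers nothing further
    return sorted(name for name, k in containers.items()
                  if k in wanted and name != synced)


containers = {
    "http": "c2_profile",
    "apollo": "payload_type",
    "apfell": "payload_type",
    "service_wrapper": "wrapper",  # hypothetical wrapper name
}
```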

Latest versions can always be found on the Mythic .

MITRE ATT&CK is a great way to track what both offense and defense are doing in the information security realm. To help Mythic operators keep track, each command can be tagged with its corresponding MITRE ATT&CK information:

There can be as many or as few mappings as desired for each command. This information is used in two different ways, but both located in the MITRE ATT&CK button at the top.

The "Fetch All Commands Mapped to MITRE" button uses this information to show the realm of what is possible with all of the payload types and commands registered within Mythic. This gives a coverage map of what could be done. Clicking each matrix cell gives a breakdown of which commands from which payload types achieve that objective:

The "Fetch All Issued Tasks Mapped to MITRE" only shows this information for commands that have already been executed in the current operation. This shows what's been done, rather than what's possible. Clicking on a cell with this information loaded gives the exact task and command arguments that occurred with that task:

Internal documentation docker container

Mythic provides an additional docker container that serves static information about the agents and c2 profiles. In the main Mythic/.env file you can set which port you want to run this on via the "DOCUMENTATION_PORT" key. You don't need to worry about this port too much since Mythic uses an Nginx reverse proxy to transparently proxy connections back to this container based on the web requests you make.

The documentation stands up a Golang HTTP server based on Hugo (it's HTTP, not HTTPS). It reads all of the markdown files in the /Mythic/documentation-docker/ folder and creates a static website from it. For development purposes, if you make changes to the markdown files here, the website will automatically update in real time. From the Mythic UI if you hit /docs/ then you'll be directed automatically to the main documentation home page.

This page tracks presentations / webinars about Mythic

All information is tracked in the MythicMeta organization on GitHub available here: https://github.com/MythicMeta/Presentations/blob/main/README.md

Feb 23, 2022 - Mythic 2.3 & Apollo 2.0 Updates

Recording: Zoom Webinar

Slides: PDF

Payload types are the different kinds of agents that can be created and used with Mythic.

Payload type information is located in the C2 Profiles and Payload Types page by clicking the headphone icon in the top of the page.

From this initial high-level view, a few important pieces of information are shown:

Container status indicates if the backing container is online or offline based on certain RabbitMQ Queues existing or not. This status is checked every 5 seconds or so.

The name of the payload type which must be unique

Which operating systems the agent supports

The documentation container contains detailed information about the commands, OPSEC considerations, supported C2 profiles, and more for each payload type when you install it. From the Payload Types page, you can click the blue document icon to automatically open up the local documentation website to that agent.

Command and Control (C2) profiles are the way an agent actually communicates with Mythic to get tasking and post responses. There are two main pieces for every C2 profile:

Server code - code that runs in a docker container to convert the C2 profile communication specification (twitter, slack, dropbox, websocket, etc) into the corresponding RESTful endpoints that Mythic uses

Agent code - the code that runs in a callback to implement the C2 profile on the target machine.

C2 profiles can be found by going to Payload Types and C2 Profiles (headphone icon) from the top navigational bar.

Each C2 profile is in its own docker container, the status of which is indicated on the C2 Profiles page.

Each docker container has a python or golang service running in it that connects to a RabbitMQ message broker to receive tasking. This allows Mythic to modify files, execute programs, and more within other docker containers.

The documentation container contains detailed information about the OPSEC considerations, traffic flow, and more for each container when you install the c2 profile. From the C2 Profiles page, you can click the blue document icon to automatically open up the local documentation website to that profile.

Actions are special messages that don't adhere to the normal message types that you see for the rest of the features in this section. There are only a handful of these messages:

Action: checkin - Initial checkin messages and key exchanges

Action: get_tasking - Getting tasking

Action: post_response - Sending tasking responses

Inside of this is where the features listed throughout this section appear
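As a rough sketch of how a receiver might route these three actions, the example below dispatches on an `action` field. The JSON envelope, the handler names, and the returned strings are assumptions for illustration; the real message encoding is not described in this section.

```python
import json
from typing import Callable, Dict


def handle_checkin(message: dict) -> str:
    return "initial checkin / key exchange"


def handle_get_tasking(message: dict) -> str:
    return "return queued tasking"


def handle_post_response(message: dict) -> str:
    return "store task responses"


HANDLERS: Dict[str, Callable[[dict], str]] = {
    "checkin": handle_checkin,
    "get_tasking": handle_get_tasking,
    "post_response": handle_post_response,
}


def dispatch(raw: str) -> str:
    """Route a message to the handler named by its (assumed) 'action' field."""
    message = json.loads(raw)
    action = message.get("action")
    handler = HANDLERS.get(action)
    if handler is None:
        raise ValueError(f"unknown action: {action!r}")
    return handler(message)
```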

All installed docker containers are located at Mythic/InstalledServices/, each with its own folder. The currently running ones can be checked with sudo ./mythic-cli status. Check A note about containers for more information about them.

Containers allow Mythic to have each Payload Type establish its own operating environment for payload creation without causing conflicting or unnecessary requirements on the host system.

Payload Type containers only come into play for a few special scenarios:

Payload Creation

Tasking

Processing Responses

For more information on editing or creating new containers for payload types, see Payload Type Development.

All PayloadTypes get 2 commands for free - clear and help. The reason these two commands are 'free' is because they don't actually make it down to the agent itself. Instead, they cause actions to be taken on the Mythic server.

The clear command does just that - it clears tasks that are sitting in the queue waiting for an agent to pick them up. It can only clear tasks that are in the submitted stage, not ones that are already in the processing stage, because that means that an agent has already requested them.

clear - entering the command just like this will clear all of the tasks in that callback that are in the appropriate stages.

clear all - entering the command just like this will clear all tasks you've entered on that callback that are in the appropriate stages.

clear # - entering the command just like this will attempt to clear the task indicated by the number after clear.

If a command is successfully cleared by this command before an agent can get to it, then that task will get an automated response stating that it was cleared and which operator cleared it. The clear task itself will get back a list of all the tasks it cleared.
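The three forms of clear can be sketched as a small parser plus a status filter. The task dictionaries and mode names are hypothetical, and because the text above does not spell out how the scope of `clear` and `clear all` differs, the sketch keeps them as separate modes without modelling that difference.

```python
from typing import List, Optional, Tuple


def parse_clear(arguments: str) -> Tuple[str, Optional[int]]:
    """Map the text after 'clear' to a (mode, task number) pair."""
    arg = arguments.strip()
    if not arg:
        return ("default", None)
    if arg.lower() == "all":
        return ("all", None)
    if arg.isascii() and arg.isdigit():
        return ("single", int(arg))
    raise ValueError(f"unsupported clear argument: {arg!r}")


def tasks_to_clear(arguments: str, tasks: List[dict]) -> List[int]:
    """Return ids of tasks that may be cleared: only those still 'submitted'."""
    mode, task_id = parse_clear(arguments)
    candidates = [t["id"] for t in tasks if t["status"] == "submitted"]
    if mode == "single":
        return [i for i in candidates if i == task_id]
    return candidates


tasks = [
    {"id": 1, "status": "submitted"},
    {"id": 2, "status": "processing"},  # already requested by an agent
    {"id": 3, "status": "submitted"},
]
```

Asking to clear task 2 returns an empty list here, since a task that has reached the processing stage can no longer be cleared.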

The help command allows users to list the commands that are currently loaded into the agent. Just help gives basic descriptions, but help [command] gives users more detailed command information. Both forms look at the loaded commands for a callback and at the backing Python files for each command to give information about usage, command parameters, and elevation requirements.

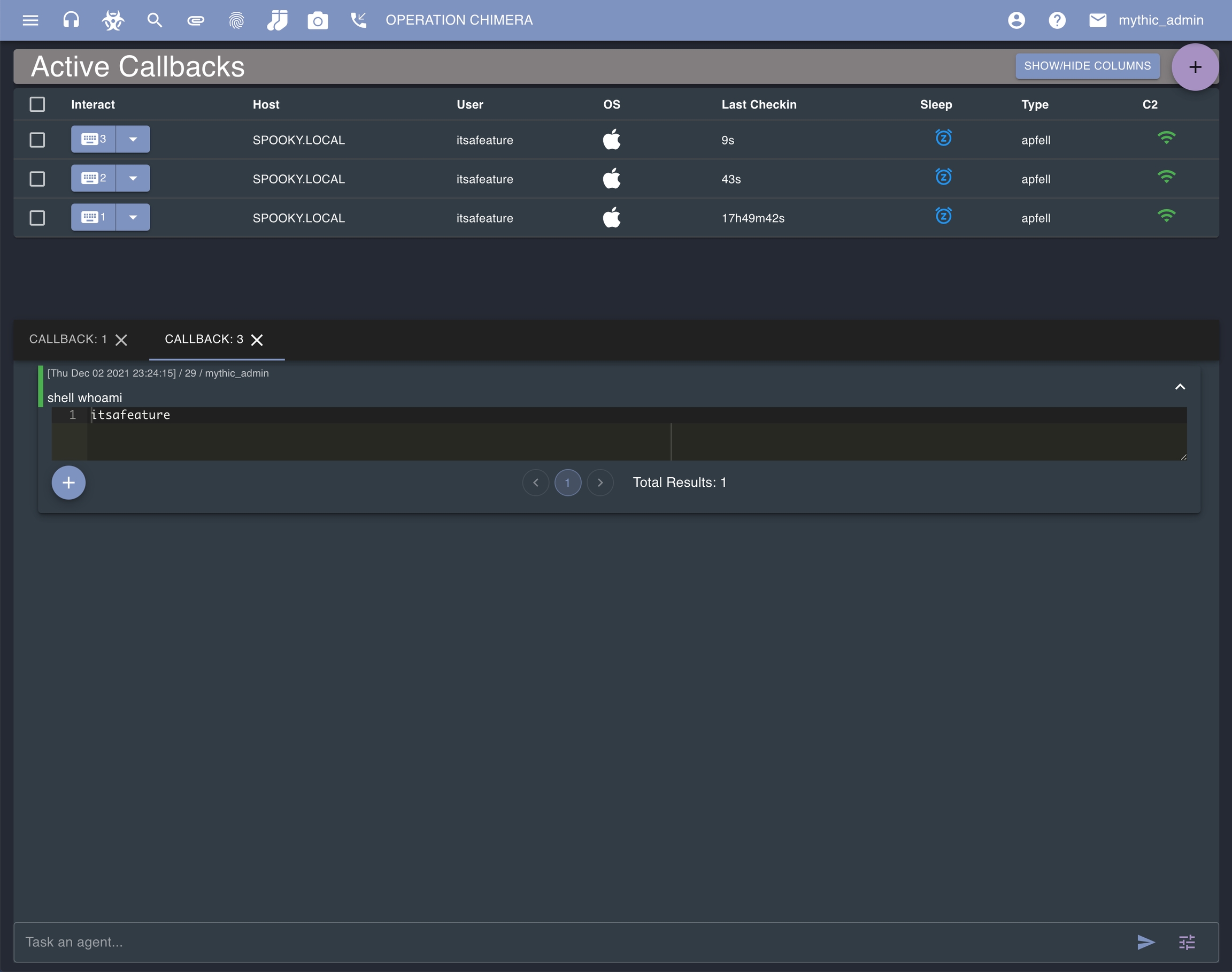

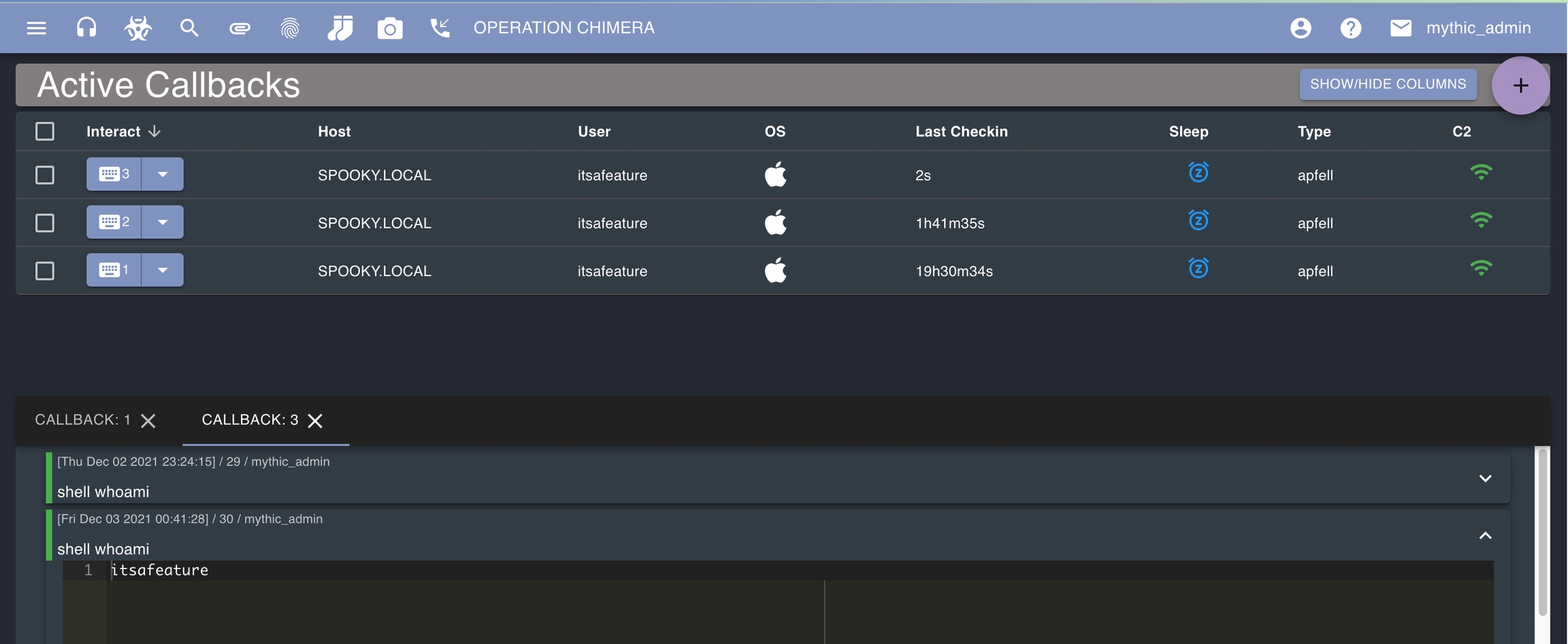

This section will highlight a few of the pieces of Mythic that operators are most likely to use on a daily basis.

Browser Scripts - use JavaScript to transform your command output into tables, buttons, links, and more

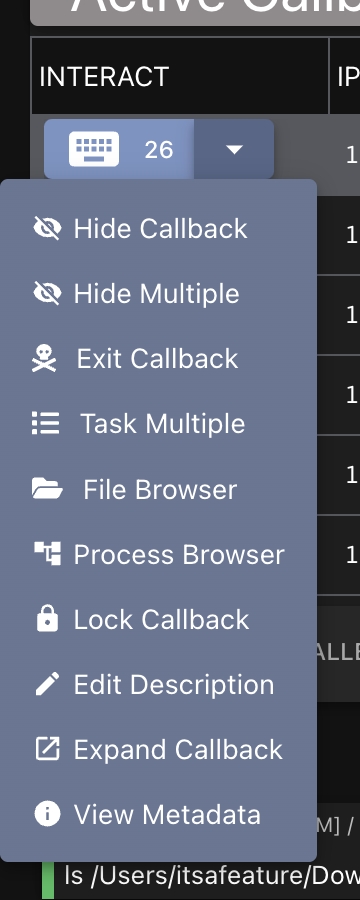

Active Callbacks - the main operational page for interacting with callbacks, also allows you to see graph/tree views of your callbacks

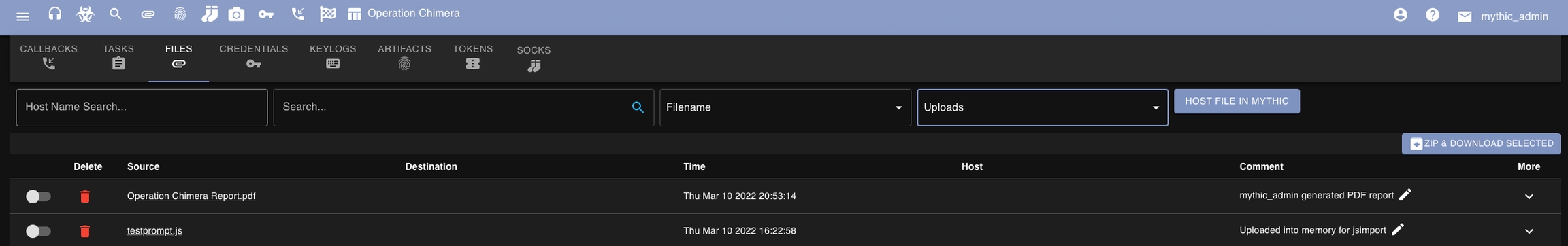

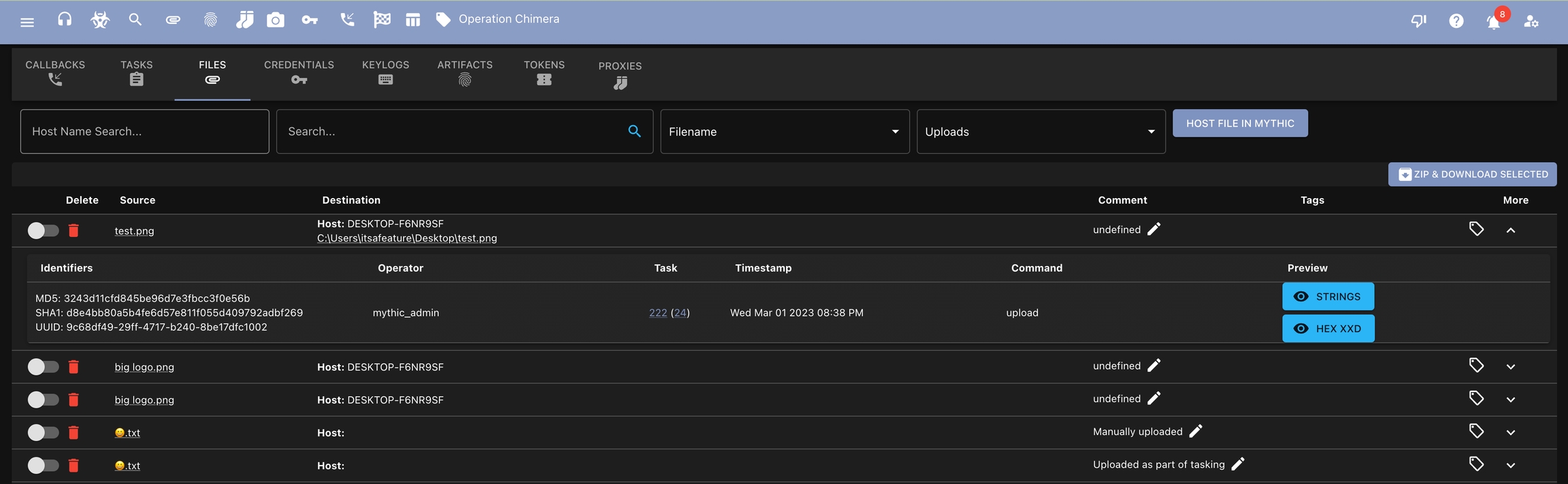

Files - view the uploads and downloads for the operation

Search - search commands, command parameters, and command output across the operation

Credentials - view/comment/edit/add credentials for your operation

Expanded Callbacks - this allows you to view callbacks as the full screen so that you have more operational screen space

Screencaptures - all of the screencaptures throughout the operation can be viewed and downloaded here

Event Feed - view all of the events going on throughout an operation (new payloads, new callbacks, users signing in, etc) as well as a basic chat program to send messages to all operators in the operation

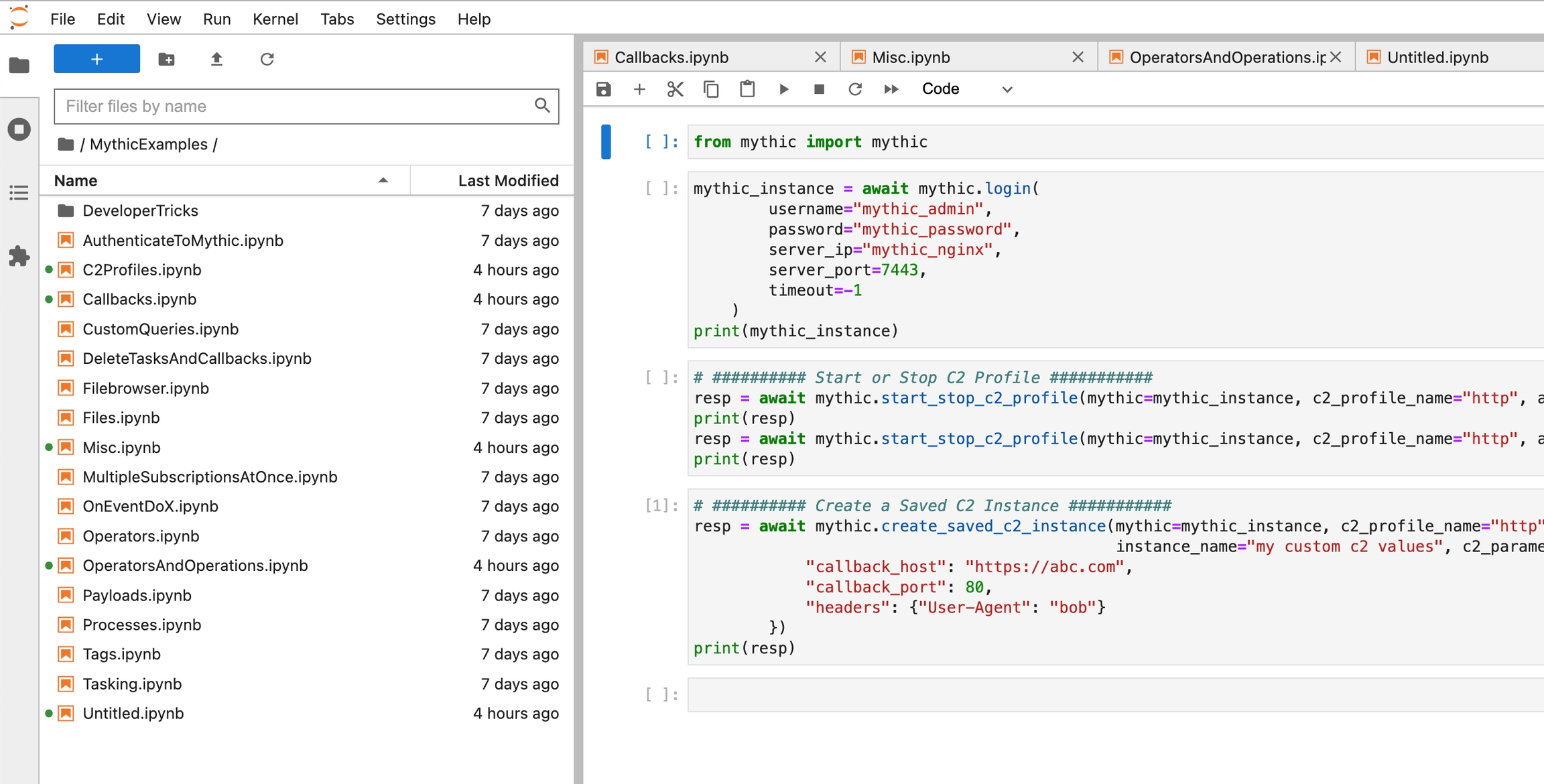

How to use the Scripting API

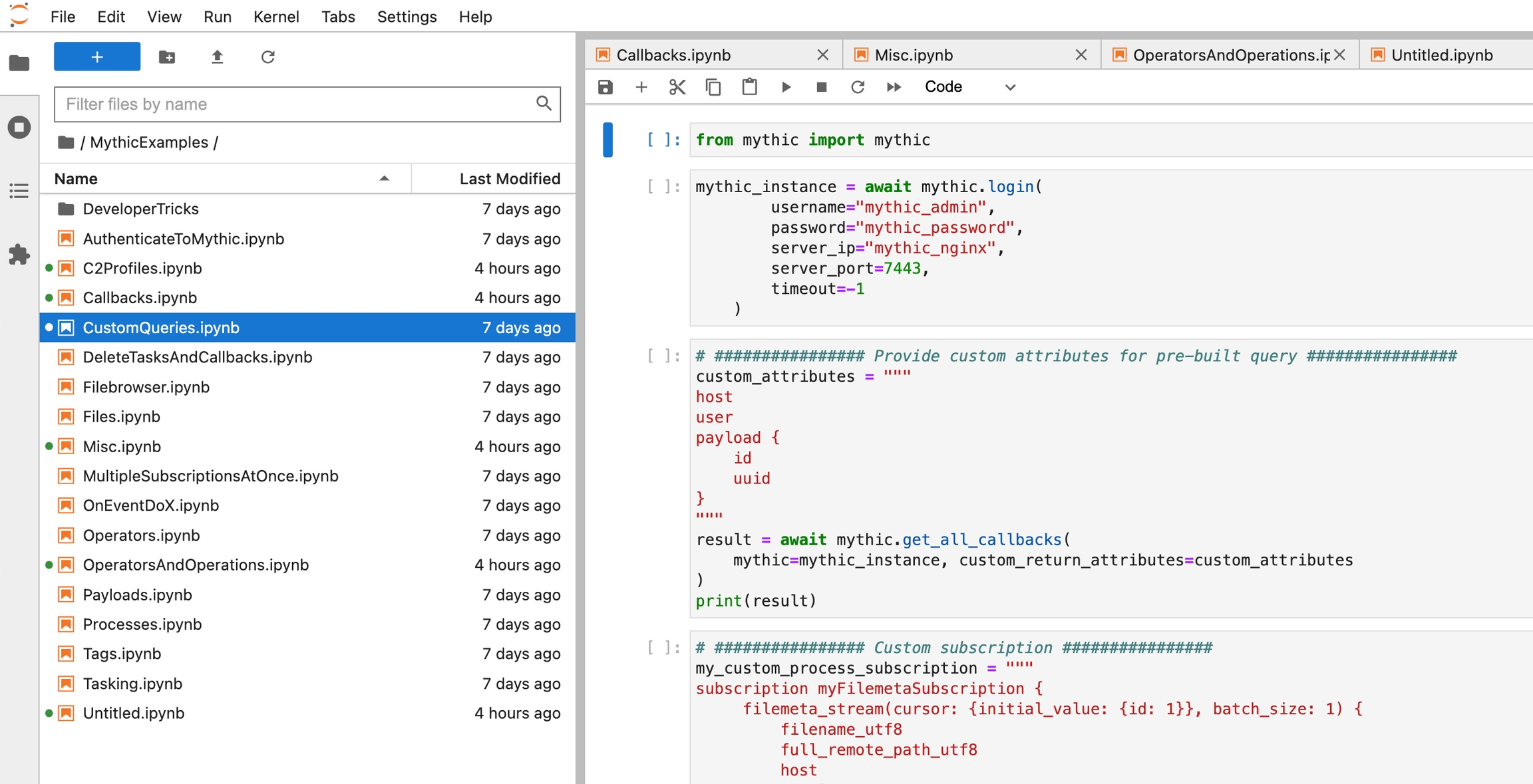

Mythic utilizes a combination of a GoLang Gin webserver and a Hasura GraphQL interface. Most actions that happen with Mythic go through the GraphQL interface (except for file transfers). We can hit the same GraphQL endpoints and listen to the same WebSocket endpoints that the main user interface uses as part of scripting, which means scripting can technically be done in any language.
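Since the interface is GraphQL over HTTP, a request can be assembled with nothing but the Python standard library. The sketch below only builds the request and does not send it; the `/graphql/` path and the `apitoken` header name are assumptions that should be checked against your own Mythic instance, and the token value is a placeholder.

```python
import json
import urllib.request
from typing import Optional


def build_graphql_request(base_url: str, api_token: str, query: str,
                          variables: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a standard GraphQL-over-HTTP POST request."""
    body = json.dumps({"query": query, "variables": variables or {}}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/graphql/",  # assumed endpoint path
        data=body,
        headers={
            "Content-Type": "application/json",
            "apitoken": api_token,               # assumed header name
        },
        method="POST",
    )


# `__typename` is valid against any GraphQL schema, so no table names are assumed.
request = build_graphql_request("https://127.0.0.1:7443", "<api token>",
                                "query { __typename }")
```

Sending this with `urllib.request.urlopen` would additionally need an SSL context that accepts the server's self-signed certificate.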

Install the PyPI package with pip3 install mythic. The current mythic package is version 0.1.1. The code for it is public - https://github.com/MythicMeta/Mythic_Scripting

The easiest way to play around with the scripting is to do it graphically - select the hamburger icon (three horizontal lines) in the top left of Mythic, select "Services", then "GraphQL Console". This will open up /console in a new tab.

From here, you need to authenticate to Hasura - run sudo ./mythic-cli config get hasura_secret on the Mythic server and you'll get the randomized Hasura secret to log in. At this point you can browse around the scripting capabilities (API at the top) and even look at all the raw database data via the "Data" tab.

The Jupyter container has a lot of examples of using Mythic Scripting to do a variety of things. You can access the Jupyter container by clicking on the hamburger icon (three horizontal lines) in the top left of Mythic, then selecting "Services", then "Jupyter Notebooks". This will open up /jupyter in a new tab.

From here, you need to authenticate to Jupyter - run sudo ./mythic-cli config get jupyter_token on the Mythic server to get the authentication token. By default, this is mythic, but can be changed at any time.

You can also reference the Jupyter examples from the Mythic repo here: https://github.com/its-a-feature/Mythic/tree/master/jupyter-docker/jupyter.

An operator can provide non-default, but specific, values for all of the fields of a C2 profile and save them off as an instance. These instances can then be used to auto-populate all of the C2 profile's values when creating a payload so that you don't have to manually type them each time.

This is a nice time saver when creating multiple payloads throughout an operation. It's likely that in an operation you will have multiple different domains, domain fronts, and other external infrastructure. It's more convenient and less error prone to provide the specifics for that information once and save it off than requiring operators to type in that information each time when creating payloads.

The Save Parameters button is located next to each C2 profile by clicking the "headphones" icon at the top of the screen.

To create a new named instance, select the Save Instance button to the right of a c2 profile and fill out any parameters you want to change. The name must be unique at the top though.

If you're curious how reverse port forwards work within Mythic and how you can hook into them with an agent, check out the section on RPFWD development.

You probably noticed as you used Mythic that there's a status associated with your task. This status goes through a variety of words/colors depending on where things are in the pipeline and what the agent has done with the task. This provides a way for the operator to know what's happening behind the scenes.

By default, a task goes through the following stages with the following statuses:

preprocessing - The task is being sent to the Payload Type container for processing (parsing arguments, confirming values, doing RPC functionality to register files, etc)

submitted - The task is now ready for an agent to pick it up

processing - The task has been picked up by an agent, but there hasn't been any response back yet

processed - The task has at least one response, but the agent hasn't marked it as done yet.

completed - The task is marked as completed by the agent and there wasn't an error in execution

error:* - The agent reports back a status of "error"

The agent can set the status of the task to anything it wants as part of its normal post_response information. Similarly, in a task's create_tasking function, you're free to set the task.status value. Anything of the error:* format will show up as red in the Mythic UI as an error for the user.
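The default progression and the error convention can be summarized in a few lines of Python. This is only an illustrative sketch: `DEFAULT_STATUSES`, `is_error_status`, and `next_default_status` are hypothetical names that are not part of Mythic, and treating a bare "error" the same as an "error:*" value is an assumption.

```python
from typing import Optional

# Default task statuses, in the order described above (hypothetical constant).
DEFAULT_STATUSES = ["preprocessing", "submitted", "processing", "processed", "completed"]


def is_error_status(status: str) -> bool:
    """Return True for "error" or any "error:*" style status (assumed convention)."""
    return status == "error" or status.startswith("error:")


def next_default_status(status: str) -> Optional[str]:
    """Return the next default status, or None for the last or a custom status."""
    if status not in DEFAULT_STATUSES:
        return None
    index = DEFAULT_STATUSES.index(status)
    if index + 1 < len(DEFAULT_STATUSES):
        return DEFAULT_STATUSES[index + 1]
    return None
```

A custom status set by an agent or by create_tasking simply falls outside this list, which is why the helper returns None for it rather than raising.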

API tokens are special JSON web tokens (JWTs) that Mythic can create per-user that don't expire automatically. This allows you to do long-term scripting capabilities without having to periodically check if your current access-token is expired, going through the refresh process, and then continuing along with whatever you were doing.

They're located in your settings page (click your name in the top right and click settings).

When making a request with an API token, set the apitoken header to the value of your API token. This is in contrast to normal JWT usage, where the header is Authorization and the value is Bearer <token here>.
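As a rough illustration of the header difference, the sketch below builds (but does not send) a GraphQL request that carries an API token. It uses only the Python standard library. The URL, the token value, and the `build_graphql_request` helper are placeholders invented for this example; check the endpoint for your own installation.

```python
import json
import urllib.request


def build_graphql_request(url: str, api_token: str, query: str) -> urllib.request.Request:
    """Build a POST request that authenticates with the apitoken header."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # API tokens use their own header rather than Authorization.
            "apitoken": api_token,
        },
        method="POST",
    )


# Placeholder URL and token; "query { __typename }" is a schema-independent query.
request = build_graphql_request(
    "https://mythic.example:7443/graphql/",
    "<api token here>",
    "query { __typename }",
)
```

Sending the request (for example with urllib.request.urlopen) is left out on purpose, since TLS settings and the endpoint path depend on how the server is deployed.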

Mythic has a special page specifically for viewing screenshots by clicking the camera icon at the top of any of the pages.

When it comes to registering screenshots with Mythic, the process is almost identical to File Downloads (Agent -> Mythic); however, we set the is_screenshot flag to true in the download portion of the message:

    {"action": "post_response", "responses": [
      {
        "task_id": "UUID here",
        "download": {
          "total_chunks": 4,
          "full_path": "/test/test2/test3.file", // full path to the file downloaded
          "host": "hostname the file is downloaded from",
          "is_screenshot": true // indicate if this is a file or screenshot
        }
      }
    ]}

So, you want to add a new command to a Payload Type. What does that mean, where do you go, and what do you have to do?

Luckily, the Payload Type containers are the source of truth for everything related to them, so that's the only place you'll need to edit. If your payload type uses its own custom message format, then you might also have to edit your associated translation container, but that's up to you.

Make a new .py file with your command class and make sure it gets imported before mythic_container.mythic_service.start_and_run_forever is called so that the container is aware of the command before syncing over.

This new file should match the requirements of the rest of the commands

Once you're done making edits, restart your payload type container via: ./mythic-cli start [payload type name]. This will restart just that one payload type container, reloading the python files automatically, and re-syncing the data with Mythic.

Make a new .go file with your new command struct instance. You can either do this as part of an init function so it gets picked up automatically when the package/file is imported, or you can have specific calls that initialize and register the command.

Eventually, run agentstructs.AllPayloadData.Get("agent name").AddCommand so that the Mythic container is aware that the command exists. Make sure this line is executed before your MythicContainer.StartAndRunForever function call.

This new file should match the requirements of the rest of the commands

Once you're done making edits, restart your payload type container via: ./mythic-cli build [payload type name]. This will rebuild and restart just that one payload type container and re-sync the data with Mythic.

Agents can report back credentials they discover:

    {
      "task_id": "task uuid here",
      "user_output": "some user output here",
      "credentials": [
        {
          "credential_type": "plaintext",
          "realm": "spooky.local",
          "credential": "SuperS3Cr37",
          "account": "itsafeature"
        }
      ]
    }

The agent can report back multiple credentials in a single response. The credential_type field represents the kind of credential and must be one of the following:

plaintext

certificate

hash

key

ticket

cookie

The other fields are pretty straightforward, but they must all be provided for each credential. There is one optional field that can be specified here: comment. You can do this manually on the credentials page, but you can also add comments to every credential to provide a bit more context about it.
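The rules above (a fixed set of credential types, four required fields, and an optional comment) can be checked before a response is sent. The following is a minimal sketch under those stated rules; `build_credential_response` and the two constants are hypothetical names, not part of any Mythic package.

```python
# Allowed values for credential_type, as listed above.
ALLOWED_CREDENTIAL_TYPES = {"plaintext", "certificate", "hash", "key", "ticket", "cookie"}
# Fields that must be present on every credential ("comment" is optional).
REQUIRED_FIELDS = ("credential_type", "realm", "credential", "account")


def build_credential_response(task_id: str, user_output: str, credentials: list) -> dict:
    """Validate each credential and return a response dictionary for the task."""
    for credential in credentials:
        missing = [field for field in REQUIRED_FIELDS if field not in credential]
        if missing:
            raise ValueError(f"credential is missing fields: {missing}")
        if credential["credential_type"] not in ALLOWED_CREDENTIAL_TYPES:
            raise ValueError(f"unknown credential_type: {credential['credential_type']}")
    return {
        "task_id": task_id,
        "user_output": user_output,
        "credentials": list(credentials),
    }


response = build_credential_response(
    "task uuid here",
    "some user output here",
    [
        {
            "credential_type": "plaintext",
            "realm": "spooky.local",
            "credential": "SuperS3Cr37",
            "account": "itsafeature",
            "comment": "found in a config file",  # optional field
        }
    ],
)
```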

If you're curious about interactive tasking, what it means, how it works, and how you can leverage it, check out the development section on it.

MythicRPC provides a way to execute functions against Mythic and Mythic's database programmatically from within your command's tasking files via RabbitMQ.

MythicRPC lives as part of the mythic_container PyPi package (and github.com/MythicMeta/MythicContainer GoLang package) that's included in all of the itsafeaturemythic Docker images. This PyPi package uses RabbitMQ's RPC functionality to execute functions that exist within Mythic.

The full list of commands can be found here: https://github.com/MythicMeta/MythicContainerPyPi/tree/main/mythic_container/MythicGoRPC for Python and https://github.com/MythicMeta/MythicContainer/tree/main/mythicrpc for GoLang.

See the C2 Related Development section for more SOCKS specific message details.

To start / stop SOCKS (or any interactive based protocol), use the SendMythicRPCProxyStart and SendMythicRPCProxyStop RPC calls within your Payload Type's tasking functions.

For SOCKS, you want to set LocalPort to the port you want to open up on the Mythic Server - this is where you'll point your proxy-aware tooling (like proxychains) to then tunnel those requests through your C2 channel and out your agent. For SOCKS, the RemotePort and RemoteIP don't matter. The PortType will be CALLBACK_PORT_TYPE_SOCKS (i.e. socks).

Browser Scripts allow users to script the output of agent commands. They are JavaScript functions that can return structured data to indicate for the React user interface to generate tables, buttons, and more.

Browser Scripts are located in the hamburger icon in the top left -> "Operations" -> BrowserScripts.

Every user has the default browser scripts automatically imported upon user creation based on which agents are installed.

Anybody can create their own browser scripts and they'll be applied only to that operator. You can also deactivate your own script so that you don't have to delete it, but it will no longer be applied to your output. This deactivates it globally and takes effect when the task is toggled open/closed. For individual tasking you can use the speed dial at the bottom of the task and select "Toggle Browserscript".

Click Register New Script to create a new one. This is for one-off scripts you create. If you want to make it permanent across databases and for other operators, then you need to add the script to the corresponding Payload Type's container. More information about that process can be found here: .

When you're creating a script, the function declaration will always be function(task, responses) where task is a JSON representation of the current task you're processing and responses is an array of the responses displayed to the user. Each response will always be a string. If the agent actually returned JSON data, be sure to run JSON.parse on the response to convert it back to a JSON dictionary. So, to access the first response value, you'd use responses[0].

You should always return a value. It's recommended that you do proper error checking and handling. You can check the status of the task by looking at the task variable and checking the status and completed attributes.

Even if a browser script is pushed out for a command, its output can be toggled on and off individually.

This is a quick primer on using Mythic for the first time

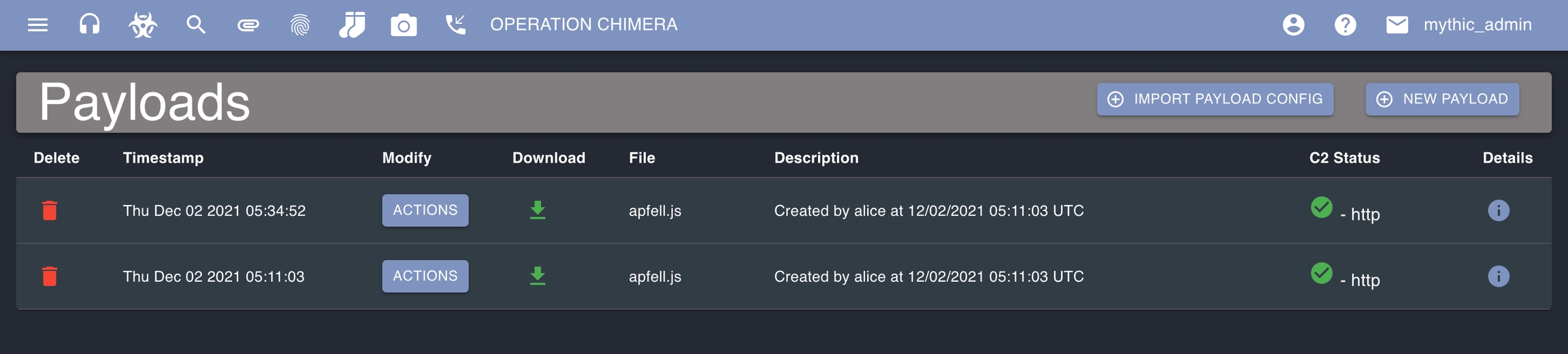

This section will quickly go from first connection to running a basic agent. This walkthrough assumes you have the apfell agent and the http c2 profile installed.

When you log in with the admin account, you'll automatically have your current operation set to the default operation. Your current operation is indicated in the top bar in big letters. When other operators sign in for the first time, they won't have an operation set to their current operation. You can always click on the operation name to get back to the operations management page (or click the hamburger icon on the left and select operations on the side).

You need a payload to use. Click the hazard icon at the top and then select "New Payload" on the top right of the new screen. You can also get here by selecting the hamburger icon on the top left and selecting "Create" -> "Create Payload".

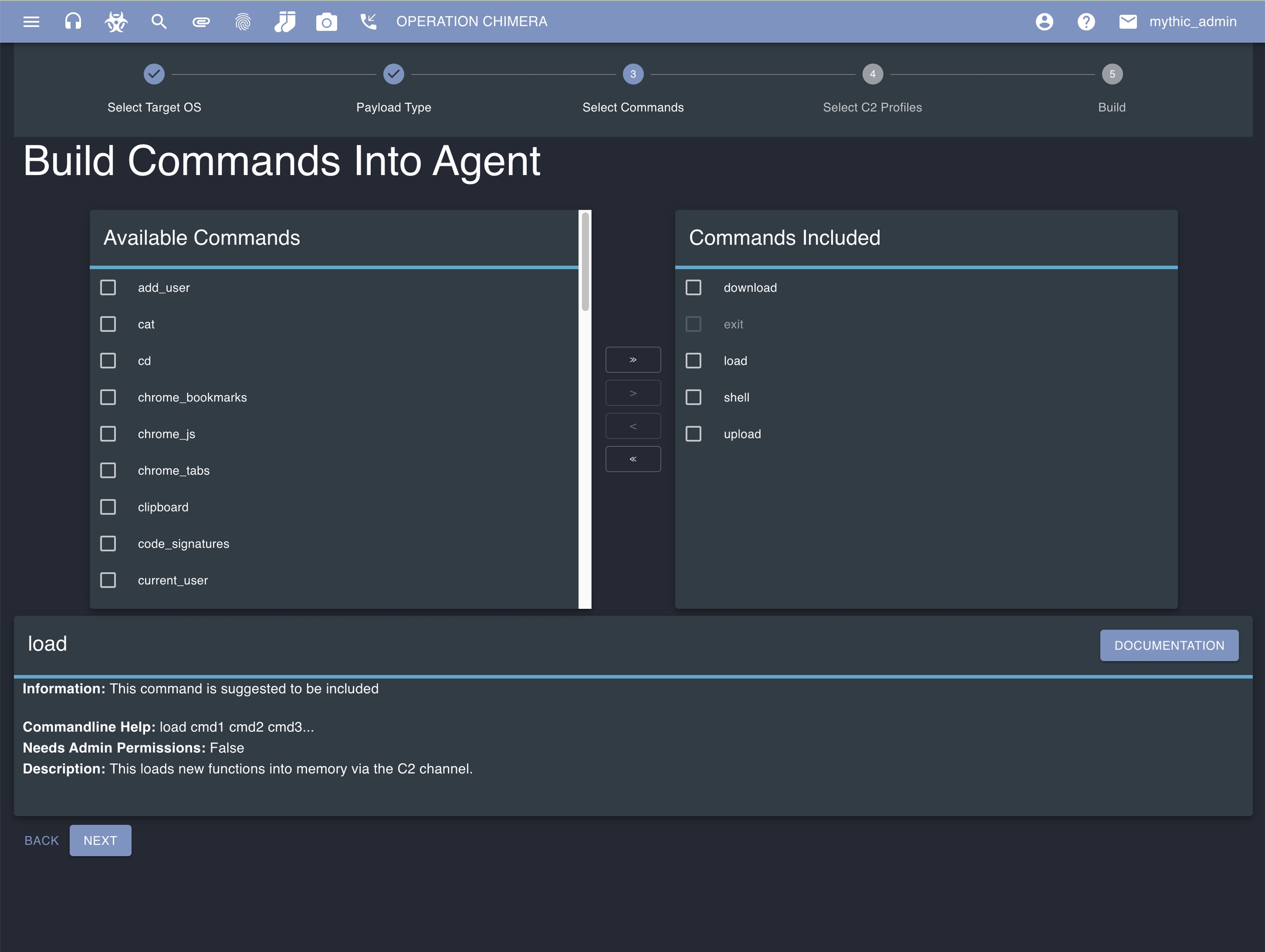

You'll be prompted to select which operating system. This is used to filter down possible payloads to generate. Next select the payload type you're wanting to build and fill out any necessary build parameters for the agent. Select any commands you want stamped into the payload initially. This will show commands not yet selected on the left and commands already selected on the right. There are some that can be pre-selected for you based on the agent developer (some are built in and can't be removed, some suggested, etc). If you hover over any of the commands you can see descriptive information about them. You can potentially load commands in later, but for this walkthrough select all of them. Click Next.

For c2 profiles, toggle the HTTP profile. Change the Callback host parameter to be where you want the agent to connect to (if you're using redirectors, you specify that here), similarly specify the Callback port for where you want the agent to connect to.

Provide a name for the agent (a default one is auto populated) and provide a description that will auto populate the description field for any callbacks created based on this payload. Click Next.

Once you click submit, you'll get a series of popups in the top giving feedback about the creation process. The blue notification popups will go away after a few seconds, but the green success or red error messages must be manually dismissed. This provides information about your newly created agent.

Click the hazard icon on the top again to go to the created payloads page. This is where you'll be able to see all of the payloads created for the current operation. You can delete the payload, view the configuration, or download the payload. For this walkthrough, download the payload (green download icon).

Now move the payload over to your target system and execute it. The apfell.js payload can be run with osascript and the file name on macOS. Once you've done that, head to the Active Callbacks page from the top navigation bar via the phone icon.

This is where you'll be able to interact with any callback in the operation. Click the button for the row with your new agent to bring up information in the bottom pane where you can type out commands and issue them to the agent.

Mythic allows you to track types of tags as well as instances of tags. A tag type would be something like "contains credential" or "objective 1" - these take a name, a description, and a color to be displayed to the user. An instance of a tag would then include more detailed information such as the source of the information, the actual credential contained or maybe why that thing is tagged as "objective 1", and can even include a link for more information.

Tagging allows more logical grouping of various aspects of an operation. You can create a tag for "objective 1" then apply that tag to tasks, credentials, files, keylogs, etc. This information can then be used for easier deconfliction, attack path narratives, and even as a way to signal to other members of your assessment that something might be worthwhile to look at.

The tag icon at the top of the screen takes you to the tag management page where you can view/edit/create various types of tags and see how many times that tag is used in the current operation.

Tags are available throughout the various Mythic pages - anywhere you see the tag icon you can view/edit/add tags.

Credentials can be found from the search page on the top navigation bar or by clicking the key icon at the top.

As part of command output, credentials can be registered automatically if the agent parses out the material. Otherwise, users can also manually register credentials. There are a few pieces of information required:

The type of credential - This is more for situational awareness right now, but in the future will help the flow of how to treat the credential before use.

Account - the account this credential applies to

Realm - the domain for the credential or a generic realm in case this is a credential for something else. If the account is a local account, the Domain is the name of the computer.

Credential - the actual credential

Comment - any comment you want to store about the credential

On this page you can also see the task that created each credential (which can be Manual Entry), who added the credential, and when it was added.

Command parameters can hook into this by having a parameter type of CredentialJson - the tasking UI will get a dropdown for the various credentials to choose from and your create_go_tasking function will get a dictionary of all the credential's information.

Tasks can register credentials with the server in their responses by following the Credentials format.

Here we can see that the operator selects the different payload options they desire in the web user interface and clicks submit. That information goes to Mythic which looks up all the database objects corresponding to the user's selection. Mythic then registers a payload in a building state. Mythic sends all this information to the corresponding Payload Type container to build an agent to meet the desired specifications. The corresponding build command parses these parameters, stamps in any required user parameters (such as callback host, port, jitter, etc) and uses any user supplied build parameters (such as exe/dll/raw) to build the agent.

In the build process, there's a lot of room for customizing. Since it's all async through RabbitMQ, you are free to stamp code together, spin off subprocesses (like mono or go) to build your agent, or even make web requests to CI/CD pipelines to build the agent for you. Eventually, this process either returns an agent or some sort of error. That final result gets sent back to Mythic via RabbitMQ, which then updates the database and user interface to allow an operator to download their payload.

How does this process work if there's a translation container involved though?

Notice that the only real difference here is that if the payload type definition sets MythicEncrypts=False and there's a translation container, then it's up to the translation container to generate any encryption keys. These keys can be part of a C2 Profile or they could be part of a payload type's build parameters. This is why you see this flow happening in two places. Other than that, when it comes to building a payload, the translation container has very little interaction.

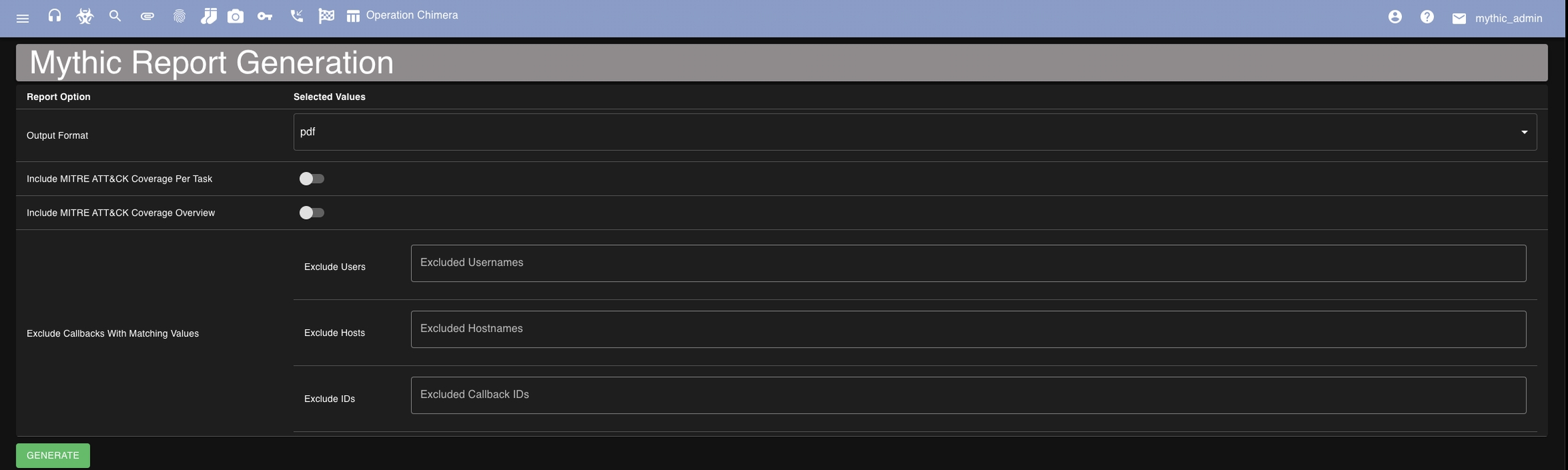

Mythic can generate JSON or XML style reports. If you need a PDF version, simply generate the XML, open it up locally, and then in your browser save it off to PDF.

Report generation is reached via the checkered flag icon in the top navigation bar.

You can select your output format, if you want to include MITRE ATT&CK mappings inline with each tasking and if you want a MITRE ATT&CK Summary at the end. You can also optionally exclude certain callbacks, usernames, and hostnames from being included in the generated report.

The final generated report can be downloaded from this screen when it's ready via a toast notification. If you navigate away before it's done though, the report is also always available from the "files" section of the search page (click the paper clip icon at the top and select "Uploads" instead of "Downloads").

Here we can see an agent send a message to Mythic. The C2 Profile container is simply a fancy redirector that knows how to pull the message off the wire; it doesn't do anything else. From there, Mythic starts processing the message. It pulls out the UUID so it can determine which agent/callback we're talking about. This is where a decision point happens:

If the Payload Type associated with the payload/callback for the UUID of the message has a translation container associated with it, then Mythic will send the message there. It's here that the rest of the message is converted from the agent's special sauce C2 format into the standard JSON that Mythic expects. Additionally, if the Payload Type handles encryption for itself, then this is where that happens.

If there is no translation container associated with the payload/callback for the UUID in the message, then Mythic moves on to the next step and starts processing the message.

Mythic then processes the message according to the "action" listed.

Mythic then potentially goes back to the translation container to convert the response message back to the agent's custom C2 spec before finally returning everything back through the C2 Profile docker container.
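The first steps of this flow (pull out the UUID, then decide whether a translation container is involved) can be sketched in Python. The message layout used here is an assumption made for illustration: a base64 string whose decoded bytes start with a 36-character UUID followed by the rest of the message. The `split_agent_message` and `next_hop` helpers are hypothetical and do not mirror Mythic's actual server code.

```python
import base64
import uuid
from typing import Tuple

UUID_LENGTH = 36  # length of a hyphenated UUID string (assumed message prefix)


def split_agent_message(raw: str) -> Tuple[str, bytes]:
    """Decode a message and split it into (uuid, remaining body)."""
    decoded = base64.b64decode(raw)
    if len(decoded) < UUID_LENGTH:
        raise ValueError("message is too short to contain a UUID")
    # uuid.UUID raises ValueError if the prefix is not a well-formed UUID.
    agent_uuid = str(uuid.UUID(decoded[:UUID_LENGTH].decode("ascii")))
    return agent_uuid, decoded[UUID_LENGTH:]


def next_hop(has_translation_container: bool) -> str:
    """Mirror the decision point: translate first, or process directly."""
    return "translation_container" if has_translation_container else "mythic_processing"


# Example with a made-up UUID and body.
example_uuid = "123e4567-e89b-12d3-a456-426614174000"
example_body = b'{"action": "get_tasking"}'
wire_message = base64.b64encode(example_uuid.encode("ascii") + example_body).decode("ascii")
parsed_uuid, parsed_body = split_agent_message(wire_message)
```

In this sketch the body is left as raw bytes, because whether it is already JSON or still needs translation (or decryption) depends on the payload type.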

For any active callback, select the dropdown next to it and select "Expand Callback". This will open a new tab for that callback where you can actually view the tasking full screen with metadata on the side.

When you're downloading a file from the Agent to Mythic (such as a file on disk, a screenshot, or some large piece of memory that you want to track as a file), you have to indicate in some way that this data is specific to a file and not destined to be part of the information displayed to the user. The way this works is pretty much the inverse of what happens for uploads. Specifically, an agent has a file it wants to transfer, so it tells Mythic "i have data, i'm gonna send it as X chunks of size Y, can you give me a UUID so we can track this". Mythic tracks the data and gives back a UUID. Now the agent sends each chunk individually and Mythic can track it. This allows a single task to be able to send back multiple files concurrently or sequentially and still track it all.
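The chunking side of this exchange can be sketched as follows. The total_chunks field appears in the message format shown elsewhere in this documentation; the chunk_num, file_id, and chunk_data field names, the 1-based chunk numbering, and the base64 encoding are assumptions for illustration, as are the `plan_download` and `chunk_messages` helper names.

```python
import base64
import math
from typing import Dict, Iterator


def plan_download(data: bytes, chunk_size: int) -> Dict[str, int]:
    """Describe the transfer up front: how many chunks of what size."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return {"total_chunks": math.ceil(len(data) / chunk_size), "chunk_size": chunk_size}


def chunk_messages(data: bytes, chunk_size: int, file_id: str) -> Iterator[dict]:
    """Yield one message per chunk, tagged with the UUID Mythic handed back."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for offset in range(0, len(data), chunk_size):
        yield {
            "chunk_num": offset // chunk_size + 1,  # assumed to be 1-based
            "file_id": file_id,
            "chunk_data": base64.b64encode(data[offset:offset + chunk_size]).decode("ascii"),
        }


plan = plan_download(b"abcdefghij", chunk_size=4)
messages = list(chunk_messages(b"abcdefghij", chunk_size=4, file_id="UUID from Mythic"))
```

Because every chunk carries the file identifier, chunks from several files can be interleaved within one task and still be reassembled separately.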

There are two kinds of C2 profiles - egress profiles that talk directly out of the target network or peer-to-peer (p2p) profiles that talk to neighboring agents.

The default HTTP and the dynamicHTTP profiles are both examples of egress profiles. They talk directly out of the target network. Egress profiles have associated Docker containers that allow you to do the translation between your special sauce c2 profile and the normal RESTful web requests to the main Mythic server. More information on how this works and how to create your own can be found here: C2 Related Development.

Peer-to-peer profiles in general are a bit different. They don't talk directly out to the internet; instead, they allow agents to talk to each other.

This distinction between P2P and Egress for Mythic is made by a simple boolean indicating the purpose of the c2 container.

P2P profiles announce their connections to Mythic via P2P Connections. When Mythic gets these messages, it can start mapping out what the internal mesh looks like. To help view this from an operator perspective, there is an additional view on the main Callbacks page.

This view uses a directed graph to illustrate the connections between the agents. There's a central "Mythic Server" node that all egress agents connect to. When a route is announced, the view is updated to move one of the callbacks to be a child of another callback.

The event feed is a live feed of all events happening within Mythic. This is where Mythic records messages for new callbacks, payload creations, users signing in/out, etc.

The event feed is located at the alarm bell icon in the top right.

The event feed is a running list of all that's going on within an operation. If Mythic has an error, it will be recorded in the event log with a red background and a button to allow "resolution" of the problem. If you resolve the problem, then the background will change to green. You can also delete messages as needed.

In the Mythic UI, you can click the hamburger icon (three horizontal lines) in the top left to see the current Server version and UI version.

There are three scenarios to updating Mythic: updates to the patch version (1.4.1 to 1.4.2), updates to the minor (1.4.1 to 1.5), or major version (1.4 to 2.0).
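To make the three scenarios concrete, the sketch below compares two version strings and names the kind of update. It assumes plain dotted numeric versions such as those in the examples above (it does not handle suffixes like "-rc1"), and `classify_update` is a hypothetical helper rather than anything shipped with Mythic.

```python
from typing import Tuple


def _parse(version: str) -> Tuple[int, int, int]:
    """Parse "1.4" or "1.4.2" into (major, minor, patch), padding with zeros."""
    parts = [int(piece) for piece in version.split(".")][:3]
    parts += [0] * (3 - len(parts))
    return parts[0], parts[1], parts[2]


def classify_update(current: str, target: str) -> str:
    """Return "major", "minor", "patch", or "none" for the version change."""
    cur, tgt = _parse(current), _parse(target)
    if tgt[0] != cur[0]:
        return "major"
    if tgt[1] != cur[1]:
        return "minor"
    if tgt[2] != cur[2]:
        return "patch"
    return "none"
```

For example, classify_update("1.4.1", "1.4.2") returns "patch", while classify_update("1.4", "2.0") returns "major".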

In all updates, after a git pull you should run make to get the latest mythic-cli binary.

This is when you're on version 1.2 for example and want to pull in new updates (but not a new minor version like 1.3 or 1.4). In this case, the database schema should not have changed.

Pull in the latest code for your version (if you're still on the current version, this should be as easy as a git pull)

Make a new mythic-cli binary with sudo make

Restart Mythic to pull in the latest code changes into the docker containers with sudo ./mythic-cli start

This is when you're on version 1.2 for example and want to upgrade to version 1.3 or 2.1 for example. In this case, the database schema has changed.

Starting with Mythic 3.1, we now have database migrations within PostgreSQL. You should be fine to git pull and rebuild everything. It's important that you rebuild so that server changes are pulled in for the various services that updated. This means that if you're on Mythic 3.0.0-rc* and want to upgrade to Mythic 3.1.0, you'll automatically get database migrations to help with this.

Note: I always highly recommend backing everything up if you plan to update a production system. Just in case something happens, you'll be able to revert.

You will have some down time while this happens (the containers need to rebuild and start back up), so make sure whatever you're doing can handle a few seconds to a few minutes of down time.

Pull in the latest code for your version (if you're still on the current version, this should be as easy as a git pull)

Make a new mythic-cli binary with sudo make

Restart Mythic to pull in the latest code changes into the docker containers with sudo ./mythic-cli start. If you have in your .env to not rebuild on start, then you will need to change that first.

Since Mythic now has all of the C2 Profiles and Payload Types split out into different GitHub Organizations ( and ), you might need to update those projects as well.

Agents and C2 Profiles are hosted in their own repositories, and as such, might have a different update schedule than the main Mythic repo itself. So, you might run into a scenario where you update Mythic, but now the current Agent/C2Profiles services are no longer supported.

You'll know if they're no longer supported because when the services check in, they'll report their current version number. Mythic has a range of supported version numbers for Agents, C2 Profiles, Translation services, and even scripting. If something checks in that isn't in the supported range, you'll get a warning notification in the UI about it.

To update these (assuming that the owner/maintainer of that Agent/C2 profile has already done the updates), simply stop the services (sudo ./mythic-cli stop agentname or sudo ./mythic-cli stop profileName) and run the install command again. The install command should automatically determine that a previous version exists, remove it, and copy in the new components. Then you just need to either start those individual services, or restart mythic overall.

Deleting Database

If you want to wipe the database and upgrade, the following steps will help:

Reset the database with sudo ./mythic-cli database reset

Make sure Mythic is stopped, sudo ./mythic-cli stop

Purge all of your containers, sudo docker system prune -a

Pull in the version you want to upgrade to (if you're wanting to upgrade to the latest, it's as easy as git pull)

Make a new mythic-cli binary with sudo make.

Delete your Mythic/.env file - this file contains all of the per-install generated environment variables. There might be new environment variables leveraged by the updated Mythic, so be sure to delete this file and a new one will be automatically generated for you.

Restart Mythic to pull in the latest code changes into the docker containers with sudo ./mythic-cli start

MITRE ATT&CK () is an amazing knowledge base of adversary techniques.

MITRE ATT&CK® is a globally-accessible knowledge base of adversary tactics and techniques based on real-world observations. The ATT&CK knowledge base is used as a foundation for the development of specific threat models and methodologies in the private sector, in government, and in the cybersecurity product and service community.

With the creation of ATT&CK, MITRE is fulfilling its mission to solve problems for a safer world — by bringing communities together to develop more effective cybersecurity. ATT&CK is open and available to any person or organization for use at no charge.

This is in development to bring into the new user interface. This is still tracked by the back-end and available via reporting, but the ATT&CK matrix itself still needs to be ported over to the new React interface.

Commands can be automatically tagged with MITRE ATT&CK Techniques (this is what populates the "Commands by ATT&CK" output). To locate this, you just need to look at the associated python/golang files for each command.

In addition to defining the general properties of the command (such as parameters, description, help information, etc.), this file has a field called attackmapping that takes an array of MITRE's T# values. For example, looking at the apfell agent's download command:

When this command syncs to the Mythic server, those T numbers are stored and used to populate the ATT&CK Matrix. When you issue this download command, Mythic does a lookup to see if there's any MITRE ATT&CK associations with the command, and if there are, Mythic creates entries for the "Tasks by ATT&CK" mappings. This is why you're able to see the exact command associated.

As long as you're keeping with the old MITRE ATT&CK mappings, simply add your T# to the list like shown above, then run sudo ./mythic-cli start [agent name]. That'll restart the agent's container and trigger a re-sync of information. If the container is using golang instead of python for its Mythic connectivity, then you need to run sudo ./mythic-cli build [agent name] instead.

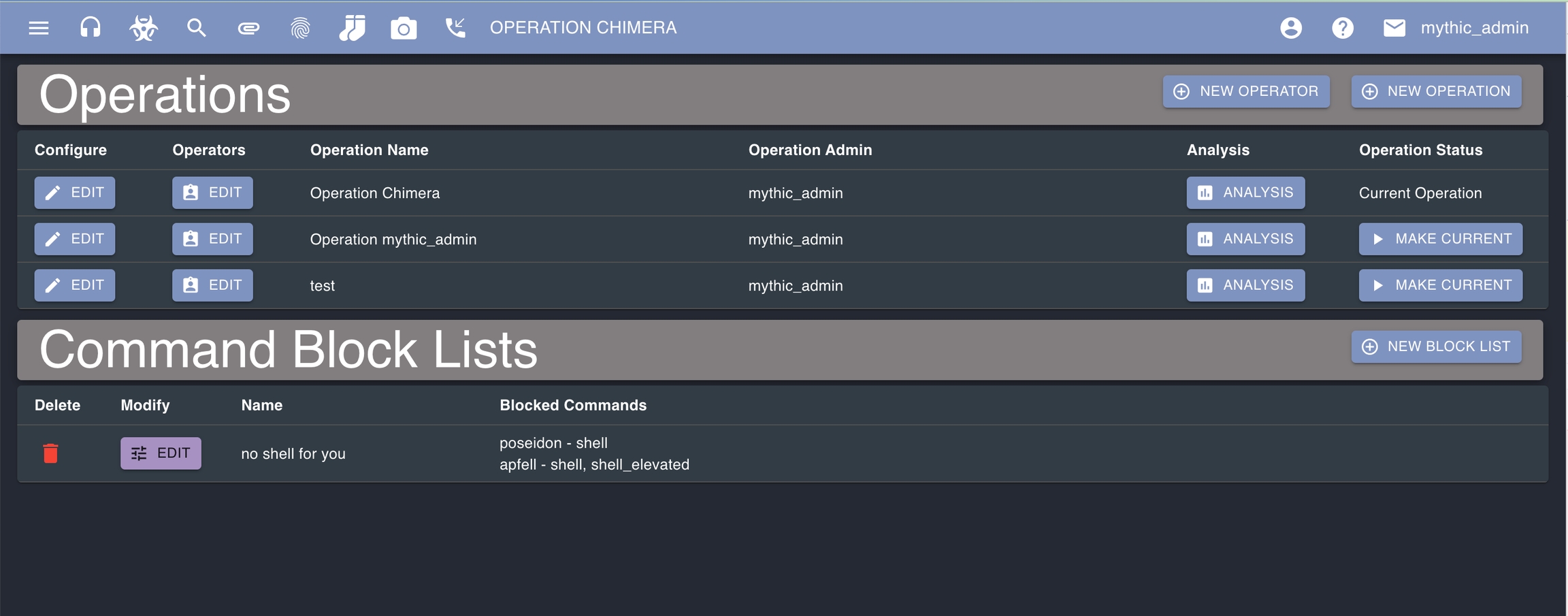

Operations are collections of operators, payloads, tasks, artifacts, callbacks, and files. While payload types and c2 profiles are shared across an entire Mythic instance, operations allow fine grained control over the visibility and access during an assessment.

Operation information can be found via the hamburger icon in the top left, then selecting "Operations" -> "Modify Operations" page. If you're a global Mythic admin, you'll see all operations here. Otherwise, you'll only see operations that are associated with your account.

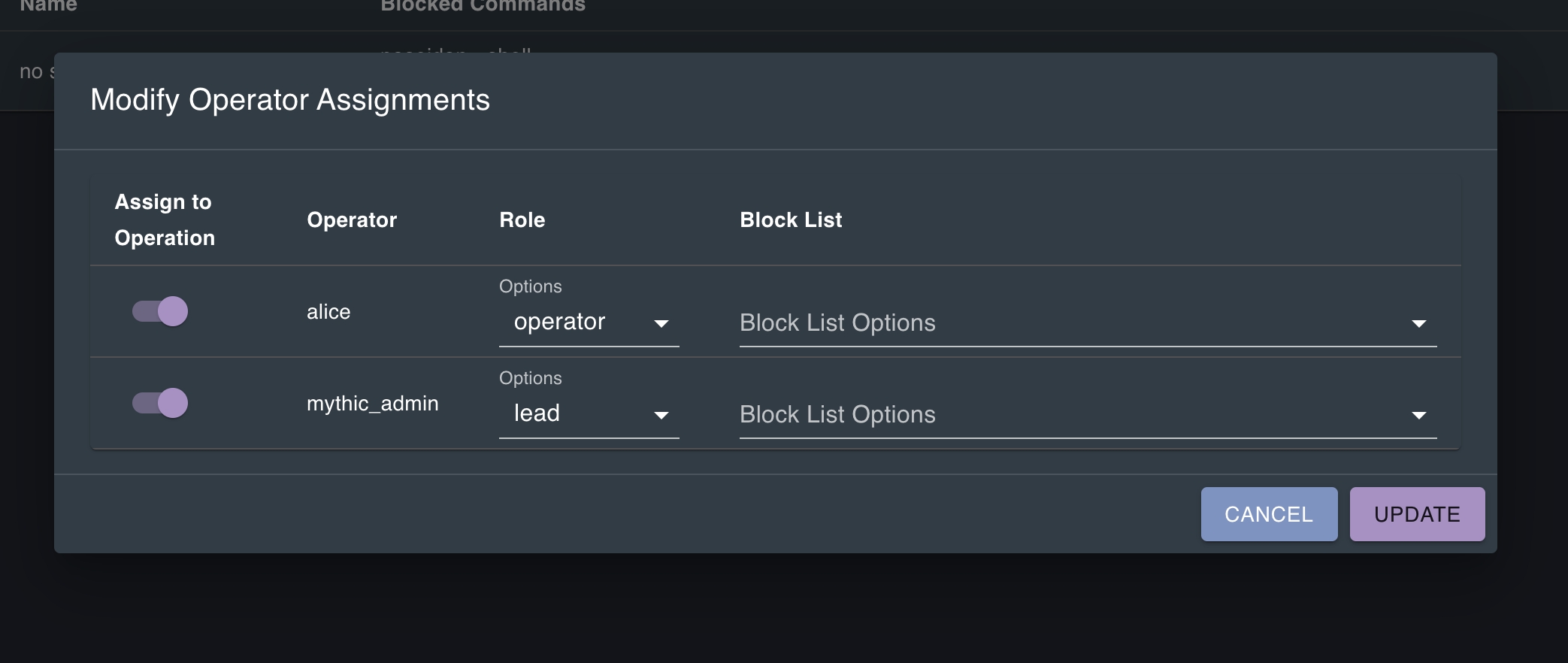

Every operation has at least one member - the lead operator. Other operators can be assigned to the operation with varied levels of access.

operator is your normal user.

lead is the lead of that operation

spectator can't do anything within Mythic. They essentially have Read-Only access across the entire operation. They can't create payloads, issue tasking, add comments, send messages, etc. They can search and view callbacks/tasking, but that's it.

For more fine-grained control than that listed above, you can also create block lists. These are named lists of commands that an operator is not allowed to execute for a specific payload type. These block lists are then tied to specific operators. This offers a middle-ground between normal operator with full access and a spectator with no access. You can edit these block lists via the yellow edit button.
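The interaction between roles and block lists can be expressed as a small check. This is a simplified sketch of the rules described above, not Mythic's implementation: the `can_issue_command` name and the dictionary shape (payload type name mapped to a set of blocked command names for one operator) are assumptions, and the command names in the usage lines are only examples.

```python
from typing import Dict, Set


def can_issue_command(
    role: str,
    payload_type: str,
    command: str,
    block_lists: Dict[str, Set[str]],
) -> bool:
    """Decide whether an operator may run a command for a payload type."""
    # Spectators have read-only access and can never issue tasking.
    if role == "spectator":
        return False
    # Otherwise the command is allowed unless a block list assigned to
    # this operator names it for the given payload type.
    return command not in block_lists.get(payload_type, set())


# Hypothetical block list assigned to one operator.
assigned = {"apfell": {"shell"}}
```

With this block list, can_issue_command("operator", "apfell", "shell", assigned) is False while the same call with "ls" is True.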

For the configure button for the operation, there are many options. You can specify a Slack webhook along with the channel. By default, whenever you create a payload via the "Create Payloads" page, it is tagged as alert-able - any time a new callback is created based on that payload, this slack webhook will be invoked. If you want to prevent that for a specific payload, go to the payloads page, select the "Actions" dropdown for the payload in question, and select to stop alerting. If you have the Slack webhook set on the operation overall, other payloads will continue to generate alerts, but not the ones you manually disable. You can always enable this feature again in the same way.

For the operators edit button, you can edit who is assigned to the operation, what their roles are, and specify which (if any) block lists should be assigned to that user.

Because many aspects of an assessment are tied to a specific operation (payloads, callbacks, tasks, files, artifacts, etc), there are many things that will appear empty within the Mythic UI until you have an operation selected as your current operation. This lets the Mythic back-end know which data to fetch for you. If you don't have an operation as your active one, then you'll see no operation name listed on the top center of your screen. Go to the operations page and, if you're assigned to an operation that you can see, you can select to "Make Current".

Socks proxy capabilities are a way to tunnel other traffic through another protocol. Within Mythic, this means tunneling other proxy-aware traffic through your normal C2 traffic. Mythic specifically leverages a modified Socks5 protocol without authentication (it's going through your C2 traffic after all).

The Mythic server runs within a Docker container, and as such, you have to define which ports to expose externally. Mythic/.env has a special environment variable you can use to expose a range of ports at a time for this exact reason - MYTHIC_SERVER_DYNAMIC_PORTS="7000-7010". By default this uses ports 7000-7010, but you can change this to any range you want and then simply restart Mythic to make the changes.
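Before starting a proxy, it can be handy to confirm that the port you intend to use falls inside the exposed range. The sketch below parses a value in the "start-end" form shown above; accepting a single port with no dash is an assumption, and `parse_port_range` is a hypothetical helper name.

```python
def parse_port_range(value: str) -> range:
    """Parse "7000-7010" (or a single port like "7000") into a range of ports."""
    start_text, _, end_text = value.strip().partition("-")
    start = int(start_text)
    end = int(end_text) if end_text else start
    if not (0 < start <= end <= 65535):
        raise ValueError(f"invalid port range: {value!r}")
    # range() excludes its stop value, so add one to include the end port.
    return range(start, end + 1)


exposed = parse_port_range("7000-7010")
```

Here 7005 in exposed is True and 3333 in exposed is False, so a proxy on a port outside the configured range would not be among the ports this setting exposes.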

Click the main Search field at the top and click the "Socks" icon on the far right (or click the socks icon at the top bar).

When you issue a command to start a socks proxy with Mythic, you specify an action "start/stop" and a port number. The port number you specify is the one you access remotely and leverage with your external tooling (such as proxychains).

An operator issues a command to start socks on port 3333. This command goes to the associated payload type's container which does an RPC call to Mythic to open that port for Socks.

Mythic opens port 3333 in a go routine.

An operator configures proxychains to point to the Mythic server on port 3333.

An operator runs a tool through proxychains (ex: proxychains curl https://www.google.com)

Proxychains connects to Mythic on port 3333 and starts the Socks protocol negotiations.

The tool sends data through proxychains, and Mythic stores it in memory. In this temporary data, Mythic assigns each connection its own ID number.

The next time the agent checks in, Mythic takes this socks data and hands it off to the agent as part of its normal message exchange with the agent.

The agent checks whether it has seen that ID before. If it has, it looks up the appropriate TCP connection and sends off the data. If it hasn't, it parses the Socks data to see where to open the connection. It then sends the resulting data and the same random ID back to Mythic.

Mythic gets the response, parses out the Socks-specific data, and sends it back to proxychains.

The above is a general scenario for how data is sent through for Socks. The Mythic server itself doesn't look at any of the data that's flowing - it simply tracks port to Callback mappings and shuttles data appropriately.
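The agent-side step in the flow above (look up a known ID, or parse the Socks data to learn where to connect) can be sketched as follows. This is a minimal illustration that assumes the standard Socks5 CONNECT request layout from RFC 1928; Mythic uses a modified Socks5, so the real wire format may differ. The class and function names are hypothetical, and the sketch only records destinations rather than opening real TCP connections.

```python
import socket
import struct
from typing import Dict, Tuple


def parse_socks5_connect(request: bytes) -> Tuple[str, int]:
    """Extract (host, port) from a standard Socks5 CONNECT request."""
    if len(request) < 7:
        raise ValueError("request too short")
    version, command, _reserved, address_type = request[:4]
    if version != 5:
        raise ValueError("not a Socks5 request")
    if command != 1:
        raise ValueError("only CONNECT is handled in this sketch")
    if address_type == 1:  # IPv4: 4 raw bytes
        host = socket.inet_ntoa(request[4:8])
        offset = 8
    elif address_type == 3:  # domain name: 1 length byte, then the name
        length = request[4]
        host = request[5:5 + length].decode("ascii")
        offset = 5 + length
    elif address_type == 4:  # IPv6: 16 raw bytes
        host = socket.inet_ntop(socket.AF_INET6, request[4:20])
        offset = 20
    else:
        raise ValueError("unknown address type")
    if len(request) < offset + 2:
        raise ValueError("request truncated before port")
    (port,) = struct.unpack("!H", request[offset:offset + 2])
    return host, port


class AgentSocksTable:
    """Hypothetical agent-side map of connection ID to destination."""

    def __init__(self) -> None:
        self.connections: Dict[int, Tuple[str, int]] = {}

    def handle(self, connection_id: int, data: bytes) -> str:
        if connection_id in self.connections:
            # Known ID: a real agent would write `data` to the open TCP socket.
            return "forward"
        # New ID: parse the destination; a real agent would connect here.
        self.connections[connection_id] = parse_socks5_connect(data)
        return "open"
```

The first message for a given ID returns "open" and records the destination; later messages with the same ID return "forward".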

Don't forget to change the sleep interval of your agent back to your normal intervals when you're done with Socks so that you reduce the burden on both the server and your agent.

The database schema describes the current state of Mythic within the mythic_postgres Docker container. Mythic tracks everything in the Postgres database so that if an operator needs to close their browser or the server where Mythic runs reboots, nothing is lost. The benefit of having all data tracked within the database and simply streamed to the operator's interface means that all operators stay in sync about the state of the operation - each operator doesn't have to browse all of the file shares themselves to see what's going on and you don't have to grep through a plethora of log files to find that one task you ran that one time.

The database lives in the postgres-docker folder and is mapped into the mythic_postgres container as a volume. This means that if you need to move Mythic to a new server, simply stop Mythic with ./mythic-cli stop, copy the Mythic folder to its new home, and start everything back up again with ./mythic-cli start.

On the first start of Mythic, the database schema is loaded from a schema file located in mythic-docker: .

Since the database schema is the source of truth for all of Mythic, mythic scripting, and all of the operator's interfaces, it needs to be easily accessible in a wide range of cases.

The mythic_server container connects directly to the mythic_postgres container to sync the containers and quickly react to agent messages. The mythic_graphql container (Hasura) also directly connects to the database and provides a GraphQL interface to the underlying data. This GraphQL interface is what both the React UI and mythic scripting use to provide a role-based access control (RBAC) layer on top of the database.

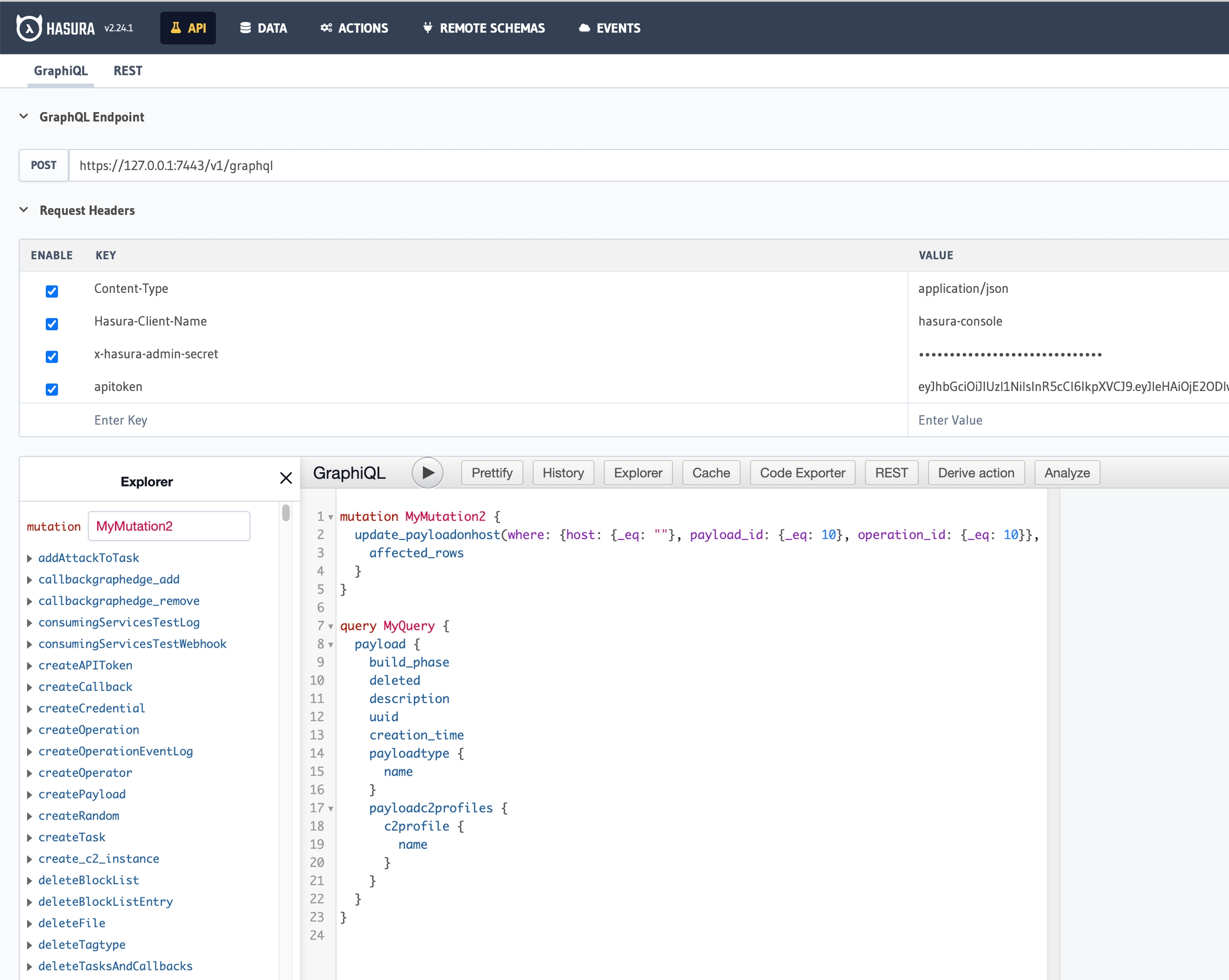

How do you, as an operator or developer, find out more about the database schema? The easiest way is to click the hamburger icon in the top left of Mythic, select "Services", and then select the "GraphQL Console". This drops you into the Hasura login screen; the randomly generated Hasura password can be found in your Mythic/.env file.

From here, the API tab, shown below, provides an easy way to dynamically explore the various queries, subscriptions, and modifications you can make to the database right here or via scripting.

Since Mythic Scripting uses this same GraphQL interface, anything you put in that center body pane you can submit as a POST request to Mythic's graphql endpoint (shown above) to achieve the same result. The majority of the functions within Mythic Scripting are simply ease-of-use wrappers around these same queries.
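As a hedged sketch of what such a POST request could look like, the snippet below builds one with Python's standard library. The endpoint path (/graphql/), the Authorization: Bearer header, the example host, and the query fields are all assumptions for illustration; check the GraphQL Console and your own deployment for the real values.

```python
import json
import urllib.request
from typing import Optional


def build_graphql_request(
    base_url: str,
    api_token: str,
    query: str,
    variables: Optional[dict] = None,
) -> urllib.request.Request:
    """Build (but do not send) a GraphQL POST request.

    The "/graphql/" path and the bearer-token header are assumptions.
    """
    body = json.dumps({"query": query, "variables": variables or {}}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/graphql/",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
        method="POST",
    )


# Hypothetical query; the field names are illustrative only.
EXAMPLE_QUERY = "query { callback { id host user } }"
```

Passing the returned request to `urllib.request.urlopen` would send it; the response body is JSON with the same shape the console shows.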

If you want to have even more fun exploring how the GraphQL interface manipulates the database schema, you can check out the built-in Jupyter Notebook and test out your modifications there as well. As shown in the two screenshots below, you can create scripts to interact with the GraphQL endpoints to return only the data you want.

This scripting, combined with the Hasura GraphQL console, gives operators direct access and real-time updates to the database without having to know any specific SQL syntax or worry about accidentally making a schema change.

C2 Profiles can optionally provide some operational security checks before allowing a payload to be created. For example, you might want to prevent operators from using a known-bad named pipe name, or you might want to prevent them from using infrastructure that you know is burned.

These checks all happen within a single function per C2 profile, called opsec:

From the code snippet above, you can see that this function gets in a request with all of the parameter values for that C2 Profile that the user provided. You can then either return success or error with a message as to why it passed or why it failed. If you return the error case, then the payload won't be built.
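To make the success-or-error pattern concrete, here is a self-contained sketch of the kind of logic such a check might contain. It does not use Mythic's real container types: OpsecRequest, OpsecResponse, the parameter names (pipename, callback_host), and the deny lists are all hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class OpsecRequest:
    """Hypothetical stand-in for the request carrying C2 parameter values."""
    parameters: Dict[str, str]


@dataclass
class OpsecResponse:
    """Hypothetical stand-in for the success or error result."""
    success: bool
    message: str


# Illustrative deny lists; real values would come from your own team.
KNOWN_BAD_PIPE_NAMES = {"known_bad_pipe"}
BURNED_HOSTS = {"burned.example.com"}


def opsec(request: OpsecRequest) -> OpsecResponse:
    """Reject a payload whose parameters match a known-bad value."""
    pipe_name = request.parameters.get("pipename", "")
    if pipe_name in KNOWN_BAD_PIPE_NAMES:
        return OpsecResponse(False, f"named pipe {pipe_name!r} is known-bad")
    host = request.parameters.get("callback_host", "")
    if any(burned in host for burned in BURNED_HOSTS):
        return OpsecResponse(False, f"host {host!r} uses burned infrastructure")
    return OpsecResponse(True, "all opsec checks passed")
```

Returning the error case with a descriptive message tells the operator exactly which parameter blocked the build.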

C2 servers know the most about their configuration. You can pass in the configuration for an agent and check it against the server's configuration to make sure everything matches up or get additional insight into how to configure potential redirectors.

C2 servers know the most about how their configurations work. You can pass in an agent's configuration and get information about how to generate potential redirector rules so that only your agent's traffic makes it through.

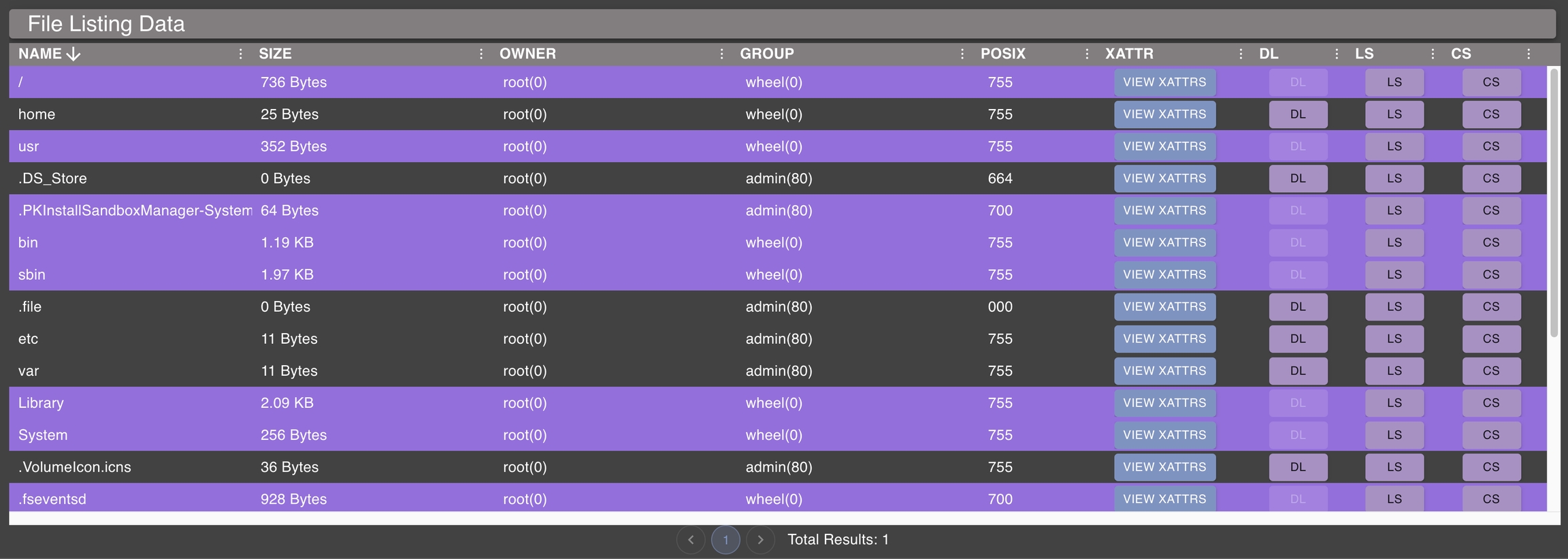

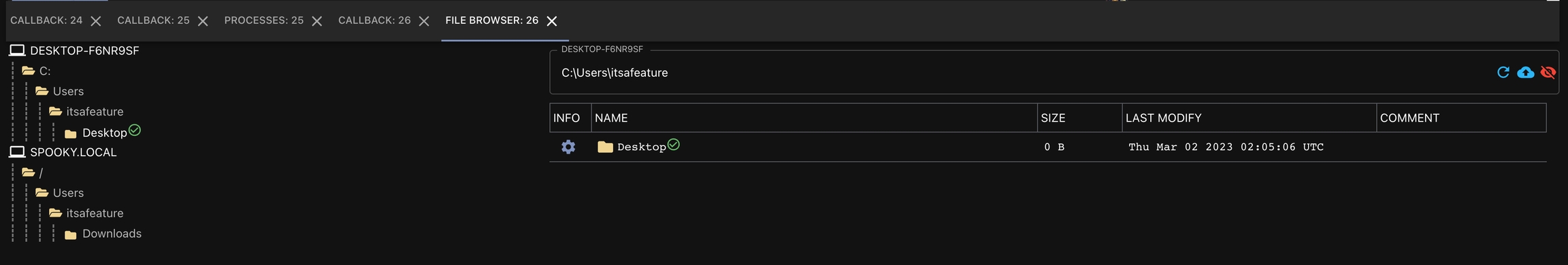

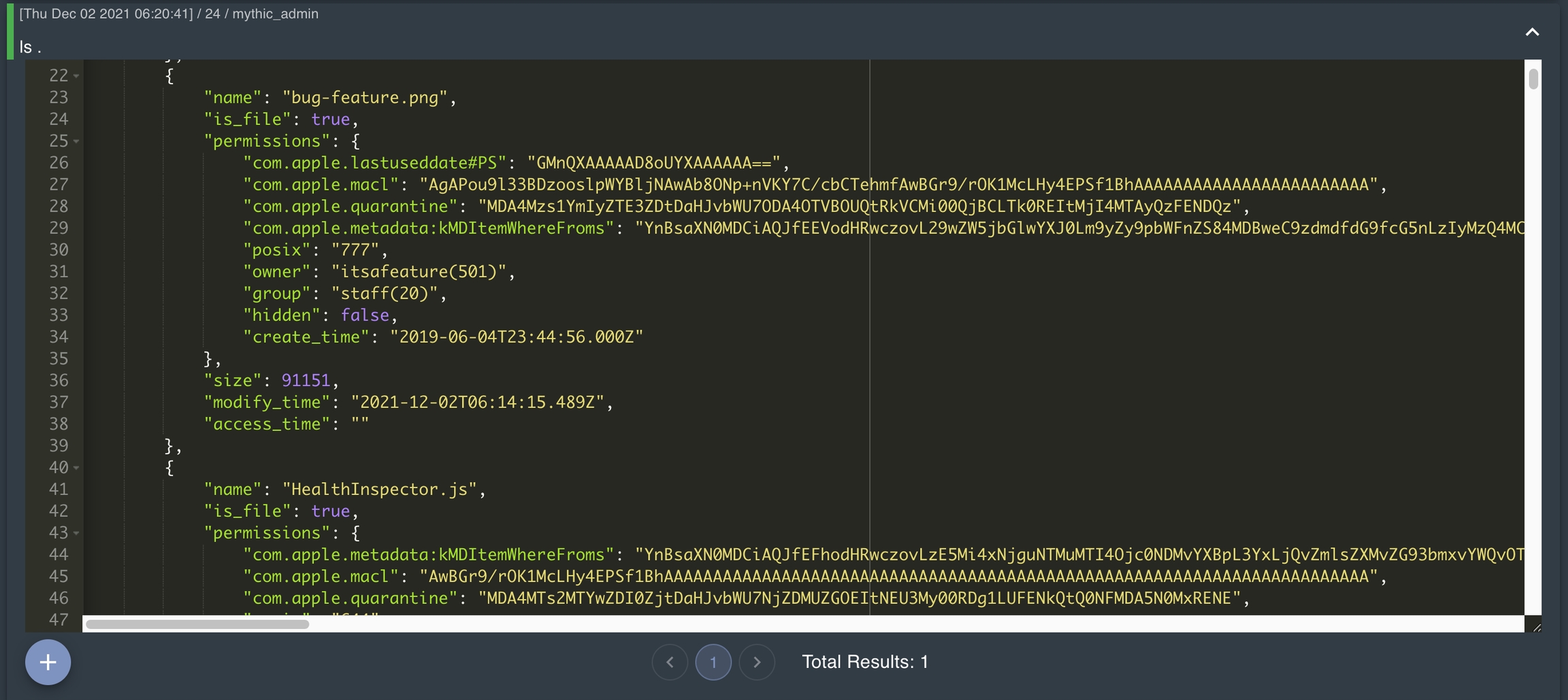

Unified, Persistent File Browser

The file browser is a visual representation of the directory listings that agents perform. Not all agents support this feature, however.

From any callback dropdown in the "Active Callbacks" window, select "File Browser" and the view will be rendered in the lower-half of the screen. This information is a combination of the data across all of the callbacks, and is persistent.

The view is divided into two pieces - a graphical hierarchy on the left and a more detailed view of a folder on the right. The top layer on the left will be the hostname and everything below it will correspond to the file structure for that host.

You'll notice a green checkmark for the files folder. The green checkmark means that an agent reported back information for that folder specifically (i.e. somebody tasked an ls of that folder or issued a list command via the button on the table side). This is in contrast to the other folders in that tree - those folders are "implicitly" known because we have the full path returned for the folder we did access. If there is a red circle with an exclamation point, it means that you tried to perform an ls on the directory, but it failed.
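The distinction between explicitly listed and implicitly known folders can be sketched as a small bookkeeping routine. This is only an illustration of the idea, not how Mythic stores the tree; the function name and the dictionary layout are hypothetical, and POSIX-style paths are assumed.

```python
from pathlib import PurePosixPath
from typing import Dict


def record_listing(tree: Dict[str, bool], listed_path: str) -> None:
    """Mark `listed_path` as explicitly listed and its parents as implicit.

    `tree` maps a folder path to True (an agent listed it, green checkmark)
    or False (only known because it appears in another folder's full path).
    """
    path = PurePosixPath(listed_path)
    for parent in path.parents:
        # setdefault never downgrades a folder that was already listed.
        tree.setdefault(str(parent), False)
    tree[str(path)] = True
```

Listing /Users/alice/files marks that folder as explicit, while /Users/alice, /Users, and / are recorded as implicit until someone lists them directly.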

On the right hand side, the table view has a few pieces along the top:

The text field is the path associated with the information below with the corresponding hostname right above it. If you haven't received any information from any agent yet or you haven't clicked on a path, this will default to the current directory (.).

The first button is the list button. This looks at the Callback number on the far right, finds the associated payload type, then looks for the command with file_browser:list set in the command's supported_ui_features. It then issues that command with the host and path shown in the first two fields. If you want to list the contents of a directory that you can't see in the UI, just modify these two values and hit list.

The second button is the upload button. This will look for the file_browser:upload set in the supported_ui_features for a command and execute that command. In most cases this will cause a popup dialog where you can upload your file.

The last field lets you toggle whether deleted files are shown.
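The lookup the list and upload buttons perform (find the command that advertises a given UI feature) can be sketched as below. The supported_ui_features key and the file_browser:list and file_browser:upload values come from the text above; the list-of-dicts layout and the cmd key are assumptions for illustration.

```python
from typing import Dict, List, Optional


def find_command_for_feature(commands: List[Dict], feature: str) -> Optional[str]:
    """Return the name of the first command advertising `feature`, if any."""
    for command in commands:
        if feature in command.get("supported_ui_features", []):
            return command["cmd"]  # "cmd" is a hypothetical key name
    return None


# Hypothetical command definitions for one payload type.
EXAMPLE_COMMANDS = [
    {"cmd": "shell", "supported_ui_features": []},
    {"cmd": "ls", "supported_ui_features": ["file_browser:list"]},
    {"cmd": "upload", "supported_ui_features": ["file_browser:upload"]},
]
```

If no command advertises the feature, the lookup returns None, which corresponds to an agent that does not support that file browser button.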

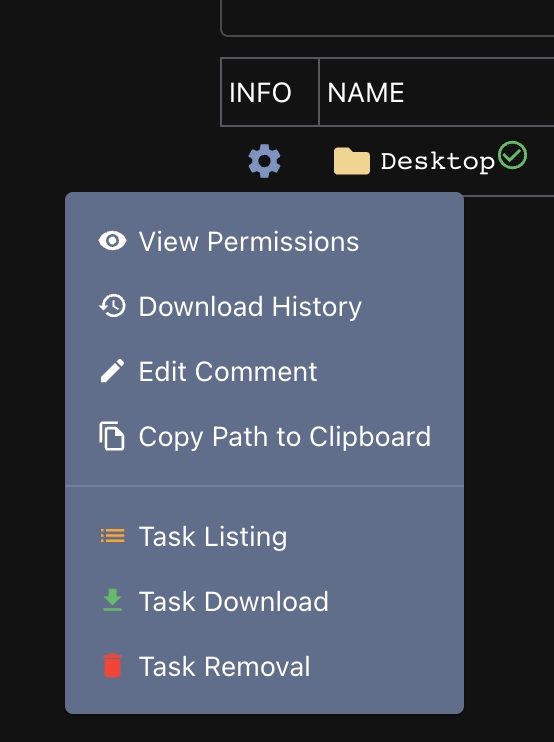

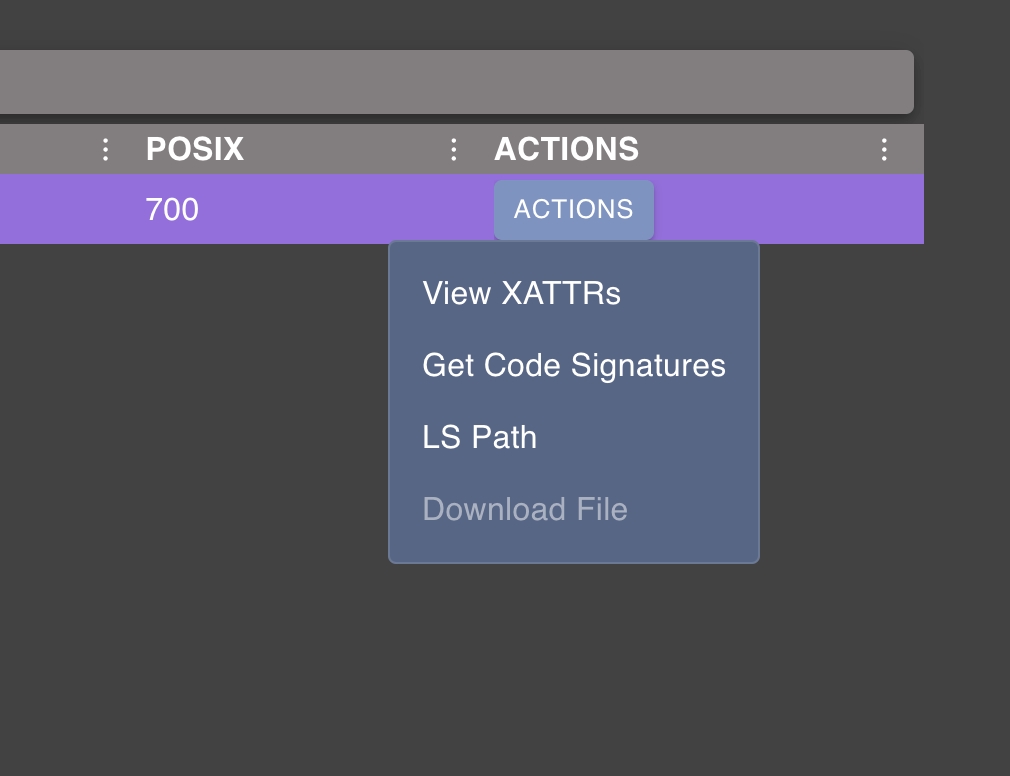

For each entry in the table menu on the right, there are some actions you can do by clicking the gear icon:

The file browser only shows some of the information that's returned. Some portions are operating system specific, like UNIX permissions, extended attributes, or SDDLs. This information doesn't make sense to display in the main table, so clicking the View Permissions action will display a popup with more specific information.

The Download History button will display information about all the times that file has been downloaded. This is useful when you repeatedly download the same file (ex: downloading a user's Chrome Cookies file every day). If you've downloaded a file, there will be a green download icon next to the filename. This will always point to the latest version of the file, but you can use the download history option to view all other instances in one pane. This popup will also show the comments associated with the tasks that issued the download commands.

The other three are self-explanatory - tasking to list a file/folder, download a file, or remove a file/folder. If a file is removed and the agent reports the removal back so that it hooks into the file browser, then the filename will have a small trash icon next to it and the name will have a strikethrough.

You installed a service into Mythic that's not yours (agent, c2, webhook, etc), made a change, but you're not seeing it? That could be from forking a public agent, making changes in your own repo and installing it with ./mythic-cli install or just making local changes on disk. Luckily, there's a really easy solution to this.

This page walks through the various things covered in this blog post as well:

Docker containers are really amazing. They rely on "images" to create a kind of "snapshot" of a simulated VM and then turn that image into an instance of that running snapshot by creating a container. These images can do a lot of things and configure a lot of different components for you so that you can be absolutely sure that how something is set up in one environment matches another environment regardless of whatever else is installed or set up. To do this, when building the image, you identify packages to install, things to configure, build new binaries, etc.

The downside is that creating the image in the first place can be very taxing for a CPU and for the HDD. A build might compile Python from source and balloon the size of intermediary layers all over the place. To help with this, some authors of Mythic services have opted to use remote images. This means that the images are already pre-built for the general case and hosted somewhere (GitHub, DockerHub, etc).

If you're ever curious about an agent using a remote image, you can check and look at the Docker Image column. If there's something there, then the service is going to use the image hosted there by default. If you want to check locally, you'll see three new variables in your .env about it. For example, let's say we installed Poseidon:

You'll see three new .env variables all prefixed with the name of the thing you installed.

The *_USE_BUILD_CONTEXT variable says whether or not to use the LOCAL build context to create an image or to instead use the specified *_REMOTE_IMAGE that's pre-built. This means that when this variable is false (the default), then no new local changes will be used and the pre-built image will simply be fetched and turned into a container. So, no matter how many local changes you make, you'll never see the changes.

Setting this to true means that the local Dockerfile will be used to generate the image you use for your container. It's most likely the case that this Dockerfile is set up to pull in your local changes when creating the image, rebuilding things as necessary. If it's not though, then your local Dockerfile will be used to generate a new local image, but it doesn't guarantee that your local changes are getting picked up. So, be sure to check the Dockerfile and if necessary, check for a .docker/Dockerfile that you might be able to copy from to make sure that your changes are used when generating the new image.
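The decision described above can be summarized in a short sketch. The *_USE_BUILD_CONTEXT and *_REMOTE_IMAGE suffixes come from the text; the assumption that the prefix is the upper-cased service name, the function name, and the example image reference are hypothetical.

```python
from typing import Dict


def image_source(env: Dict[str, str], service: str) -> str:
    """Describe which image a service's container would be created from."""
    prefix = service.upper()  # assumption: variables use the upper-cased name
    use_local = env.get(f"{prefix}_USE_BUILD_CONTEXT", "false").strip().lower() == "true"
    if use_local:
        # Local Dockerfile is built; local changes are used only if the
        # Dockerfile actually copies them into the image.
        return "local build context"
    remote = env.get(f"{prefix}_REMOTE_IMAGE", "")
    return f"remote image {remote}" if remote else "no image configured"


# Hypothetical .env contents after installing a service named poseidon.
EXAMPLE_ENV = {
    "POSEIDON_USE_BUILD_CONTEXT": "false",
    "POSEIDON_REMOTE_IMAGE": "registry.example/poseidon:latest",
}
```

With the example values the pre-built remote image is used, which is why local edits do not show up until the build-context variable is set to true and the container is rebuilt.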

By default, to go with the remote image that's used, a volume will be created to hold any changes that the container makes. This means that when local things change (such as uploading a file into a container), it goes into the volume, not locally on disk where the service is installed.

If you want to see these things locally, then set this to false.

If you make a change to either of these two variables, you need to rebuild the container to make them apply. Simply run sudo ./mythic-cli build [name] and you should see your changes.

onContainerStart Functionality

OnContainerStartFunction and on_container_start are functions you can optionally implement in any container to get execution, per operation, when the container starts up. This is helpful when your container needs to do some housekeeping and prep an agent, c2 profile, or even eventing before anything else happens.

This function is one you can implement as part of the definition for your container (PayloadType, C2Profile, Eventing, etc).